From local environmental justice issues to global phenomena such as climate change, complex problems often require systems thinking to address them. Since 2018, the National Science Foundation-funded Multilevel Computational Modeling project, a collaboration between the Concord Consortium and the CREATE for STEM Institute at Michigan State University, has researched how our SageModeler system modeling tool and associated curriculum resources, coupled with effective pedagogical strategies, can scaffold students in modeling complex systems.

By sharing the project’s discoveries and insights, we hope that additional teachers will make use of these findings and report back both what works in their classrooms and what additional research is still needed. This interchange between classroom instructional practice and educational research is where equitable, large-scale improvements in STEM teaching and learning through technology can take place. Our mission at the Concord Consortium is to help drive that change.

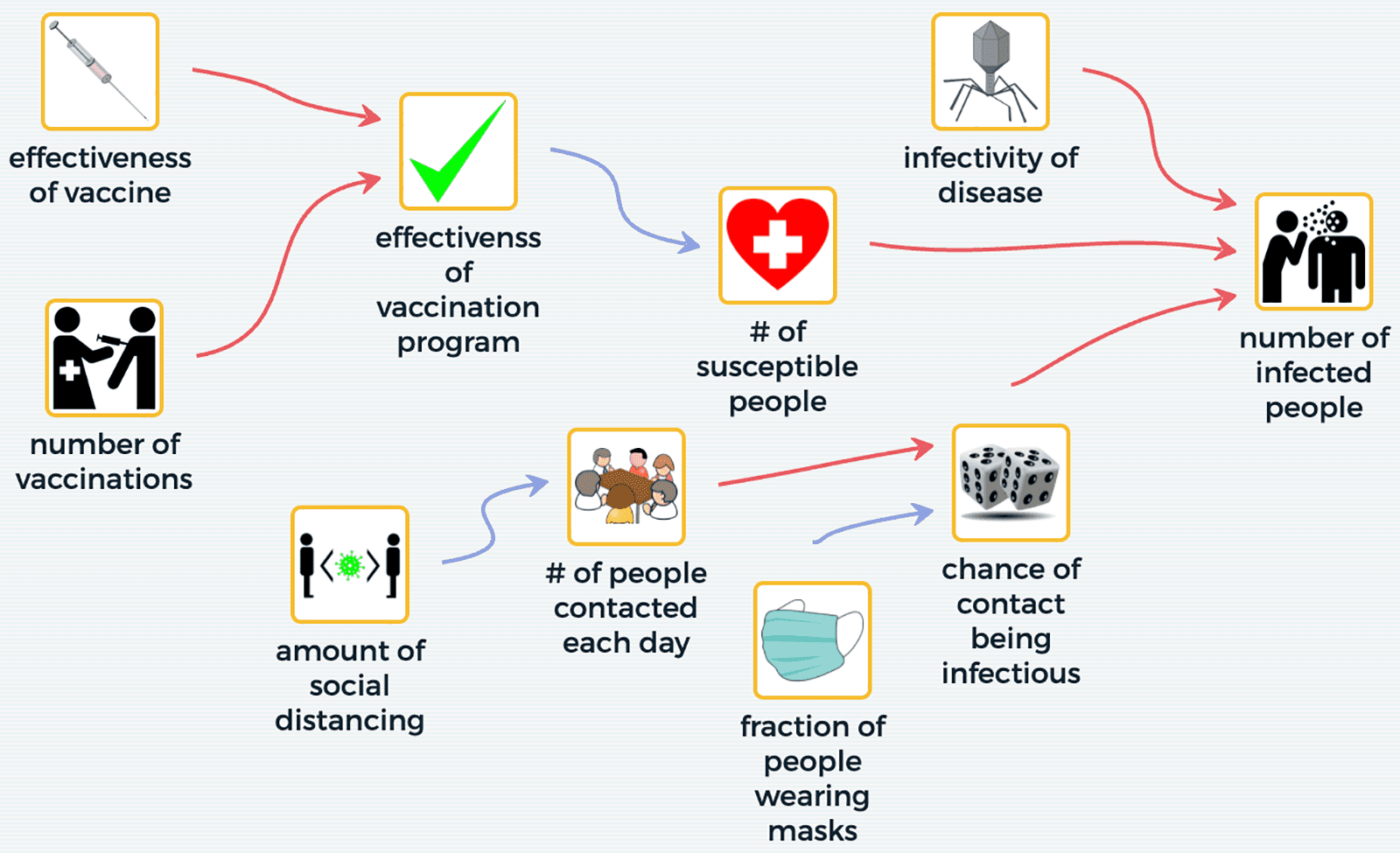

A model of an epidemic built in SageModeler.

Aspects of system modeling

We studied a classroom implementation of a high school chemistry unit on the emergent properties of gases and described how students engaged in four aspects of system modeling. We concluded with a set of recommendations for designing curricular materials that leverage digital tools to facilitate constructing, using, evaluating, and revising models, including 1) using evidence from real-world data to support model evaluation, 2) evaluating models in whole-class and small-group discussions, and 3) frequently revisiting the overarching phenomenon and the driving question the models are intended to address.

A framework for computational system modeling

We proposed a theoretical framework based on selected literature that uses modeling as a way to integrate systems thinking (ST) and computational thinking (CT). The framework for computational system modeling includes essential aspects of ST (e.g., defining a system, engaging in causal reasoning, predicting system behavior based on structure) and CT (e.g., decomposing problems, creating artifacts using algorithmic thinking, testing and debugging), and illustrates how each modeling practice draws upon aspects of both ST and CT to explain phenomena and solve problems. In our own work, we used the framework to guide the development of curriculum, support strategies, and assessments.

For example, in an article in The Science Teacher, we show how students interact with the various aspects of ST and CT as they build and refine computational models using SageModeler in a chemistry unit on evaporative cooling. Through specific classroom examples, including photographs of student experiments, screenshots of student models, and model design guidelines, we illustrate ways in which this framework can inform curriculum design and pedagogical practice. We invite teachers to apply the framework to additional curriculum units. We are delighted that other researchers are now citing the framework in their work in related fields.

Static equilibrium and dynamic time-based modeling

SageModeler offers three approaches to system modeling: 1) model diagramming, 2) static equilibrium modeling, in which a change in any independent variable instantaneously ripples through the system causing all other linked variables to change their value accordingly, and 3) dynamic time-based modeling with stocks (called collectors in SageModeler) and flows.
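To make the contrast between the second and third approaches concrete, here is a minimal Python sketch. It is not SageModeler's implementation; the variable names, the linear relationships, and the constants are all assumptions chosen for illustration. The first function recomputes every linked variable instantly from an input, as in static equilibrium modeling. The second steps a single collector (stock) through time, with an outflow that depends on how much the collector currently holds.

```python
# Hypothetical illustration only; not SageModeler's internal algorithm.
# All variable names, links, and constants below are assumptions.


def static_equilibrium(temperature: float) -> dict[str, float]:
    """Static view: linked variables are recomputed instantly from the input."""
    evaporation_rate = 0.1 * temperature   # assumed linear link
    cooling = 2.0 * evaporation_rate       # assumed linear link
    return {"evaporation_rate": evaporation_rate, "cooling": cooling}


def dynamic_collector(initial_liquid: float, rate_constant: float,
                      steps: int, dt: float = 1.0) -> list[float]:
    """Dynamic view: a collector (stock) drained by a flow over time."""
    liquid = initial_liquid
    history = [liquid]
    for _ in range(steps):
        outflow = rate_constant * liquid * dt  # the flow depends on the stock
        liquid -= outflow
        history.append(liquid)
    return history


if __name__ == "__main__":
    print(static_equilibrium(20.0))
    print(dynamic_collector(initial_liquid=100.0, rate_constant=0.5, steps=2))
```

In the static function there is no notion of time: changing the input changes every output at once. In the dynamic function the same input produces a trajectory, which is what lets students reason about change over time.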

While linear causal reasoning (where one thing causes another, which causes another) can help students understand some natural phenomena, it may not be sufficient for understanding more complex issues such as climate change and epidemics, which involve change over time, feedback, and equilibrium. We compared static equilibrium and dynamic time-based modeling and their potential to engage students in applying ST aspects in their explanations of evaporative cooling. We found that using a system dynamics approach prompted more complex reasoning aligned with systems thinking. However, some students continued to favor linear causal explanations with both modeling approaches. The results raise questions about whether linear causal reasoning may serve as a scaffold for engaging students in more sophisticated types of reasoning.

In another study, on a unit about chemical kinetics, we showed students’ increased capacity to explain the underlying mechanism in terms of change over time, although student models and explanations did not address feedback mechanisms. We also identified specific challenges students encountered when evaluating and revising models, including epistemological barriers to using real-world data for model revision. Helping students compare their model output to real-world data is a critical piece of future work that we hope teachers and researchers will take up.
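One simple way to put a number on the comparison between model output and measured data is a single error score. The sketch below uses root-mean-square error, which is our choice for illustration rather than a method taken from the studies. The function name `rmse` and the sample values are hypothetical placeholders, not project data.

```python
import math


def rmse(model_output: list[float], measured: list[float]) -> float:
    """Root-mean-square error between model output and measurements.

    Both lists must hold values for the same time points, in the same order.
    """
    if len(model_output) != len(measured):
        raise ValueError("model output and measurements must have equal length")
    if not model_output:
        raise ValueError("at least one data point is required")
    squared_errors = [(m - d) ** 2 for m, d in zip(model_output, measured)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


if __name__ == "__main__":
    # Placeholder numbers for illustration only; not data from the project.
    model_output = [10.0, 8.0, 6.0]
    measured = [10.0, 7.0, 4.0]
    print(f"RMSE: {rmse(model_output, measured):.3f}")
```

A score like this gives students a concrete target when revising: a revision that lowers the error brings the model closer to the data, although a low error alone does not show that the model's causal structure is right.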

Eliciting student reasoning when building models

We found that students sometimes engaged in unexpected reasoning when describing their system models in a chemistry unit on gas phenomena. We reviewed transcripts of interviews, looking for instances where students explained their conceptions in detail. We then identified the questioning strategies that elicited these explanations. Some of the most productive questions asked about distal relationships between nonadjacent variables (for example, when A was connected to B, which was connected to C, we asked about A’s effect on C). Several students shared interesting and non-canonical reasoning that had not shown up during classroom instruction, leading us to argue that students engage in complex causal reasoning that may be implicit and unexpected, and that if this is not recognized, teachers cannot address it. We suggest that focusing on substructures within student models and using a simple questioning strategy shows promise for helping students make their causal reasoning explicit.
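The distal-relationship questions described above can be generated mechanically from a model's links. The following Python sketch is hypothetical: it assumes each link is recorded as a cause, an effect, and a sign (+1 for "increases", -1 for "decreases"), and it only looks at two-step chains. It lists pairs such as A and C that are joined through B but have no direct link, along with the sign the chain implies.

```python
# Hypothetical representation of a student model: each link maps
# (cause, effect) to +1 ("increases") or -1 ("decreases").
Links = dict[tuple[str, str], int]


def distal_pairs(links: Links) -> dict[tuple[str, str], int]:
    """Find two-step chains cause -> middle -> effect with no direct link.

    Returns the implied sign of each distal relationship (product of the
    two link signs). If several chains join the same pair, the last one
    found wins; a fuller version would flag such conflicts.
    """
    result: dict[tuple[str, str], int] = {}
    for (cause, middle), first_sign in links.items():
        for (start, effect), second_sign in links.items():
            if start == middle and effect != cause and (cause, effect) not in links:
                result[(cause, effect)] = first_sign * second_sign
    return result


def interview_questions(links: Links) -> list[str]:
    """Turn each distal pair into an open-ended interview question."""
    return [f"What happens to {effect} when {cause} increases? Why?"
            for (cause, effect) in distal_pairs(links)]


if __name__ == "__main__":
    model = {("A", "B"): +1, ("B", "C"): -1}
    print(distal_pairs(model))
    print(interview_questions(model))
```

The implied sign is only what the model's structure predicts. The point of the question is to hear whether the student's own reasoning matches it.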

The importance of testing and debugging

Through testing and debugging system models, students can identify aspects of their models that either do not match external data or conflict with their conceptual understandings of a phenomenon, prompting them to make model revisions, which in turn deepens their understanding of a phenomenon. While this modeling aspect is challenging, we found that students can make effective use of different testing and debugging strategies, including 1) using external peer feedback to identify flaws in their model, 2) using verbal and written discourse to critique their model’s structure and suggest structural changes, and 3) using systematic analysis of model output to drive model revisions. Teachers can recommend and apply these practices.
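As one illustration of the third strategy, systematic analysis of model output, the Python sketch below changes a single input and checks whether the output moves in the direction the student expects. Everything in it is an assumption made for illustration: the helper name `direction_of_effect`, the toy student model, and the expectation being tested.

```python
from typing import Callable


def direction_of_effect(model: Callable[[float], float],
                        low: float, high: float) -> int:
    """Return +1, -1, or 0 for how the output changes as the input rises."""
    delta = model(high) - model(low)
    return (delta > 0) - (delta < 0)


def student_model(temperature: float) -> float:
    """Hypothetical student model with one link set the wrong way around."""
    return 10.0 - 0.5 * temperature


if __name__ == "__main__":
    expected = +1  # assumed expectation: the output should rise with temperature
    observed = direction_of_effect(student_model, low=10.0, high=20.0)
    if observed != expected:
        print("Mismatch: revisit the link between temperature and the output.")
    else:
        print("Output moves in the expected direction.")
```

A check like this does not say what the correct relationship is. It only surfaces a disagreement between the model and an expectation, which students then resolve through discussion or by consulting data.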

Overall, we believe these contributions have encouraged more teachers to adopt system modeling activities in their classes and to help students engage in systems thinking, computational thinking, and model building to understand and solve complex problems.

We invite you to test these ideas in your own educational practice using SageModeler in project-developed curriculum units and in your own curriculum as well. Please email sagemodeler@concord.org and let us know what you find.