Developing Assessments for the NGSS

The Next Generation Science Standards (NGSS) consist of performance expectations that combine three dimensions of learning: science and engineering practices, disciplinary core ideas, and crosscutting concepts. According to the NGSS, these three dimensions should be integrated in curricula and assessments. Because previous standards treated content and practices such as inquiry separately, the new standards represent a significant shift and present a challenge for teachers and the wider assessment community, including publishers and organizations that create standardized tests. New types of assessments are needed.

The Concord Consortium, in partnership with Michigan State University, the University of Illinois and SRI, is researching and documenting a process for creating NGSS assessments and developing a set of exemplar assessments targeting middle school physical science classrooms. These exemplars can be used for formative feedback to help teachers understand the progress their students are making in achieving specific performance expectations (PEs).

What implications do the new science standards have for assessment?

- The notion of an assessment “item” needs to be expanded to encompass more complex tasks.

- Assessments should measure all three dimensions of learning specified by the NGSS.

- Teachers should have multiple assessment avenues for gauging student progress toward broad performance expectations.

Typical assessment items involve relatively simple student responses, such as checking a box on a multiple-choice question or providing a short written answer to an open-response question. Because NGSS performance expectations by definition include a “performance” aspect, assessment items should measure both content knowledge and performance. Take, for example, the following NGSS performance expectation:

MS-PS3-4. Plan an investigation to determine the relationships among the energy transferred, the type of matter, the mass and the change in the average kinetic energy of the particles as measured by the temperature of the sample.

The disciplinary core ideas in physical science are the relationships between energy, temperature and atomic-level particles. Several crosscutting concepts are addressed here, but the most relevant is “Energy and Matter: Flows, cycles, and conservation.” The science practice is “plan an investigation.” Thus, an assessment for this performance expectation must be able to measure an action (planning an investigation), not only recall of content.

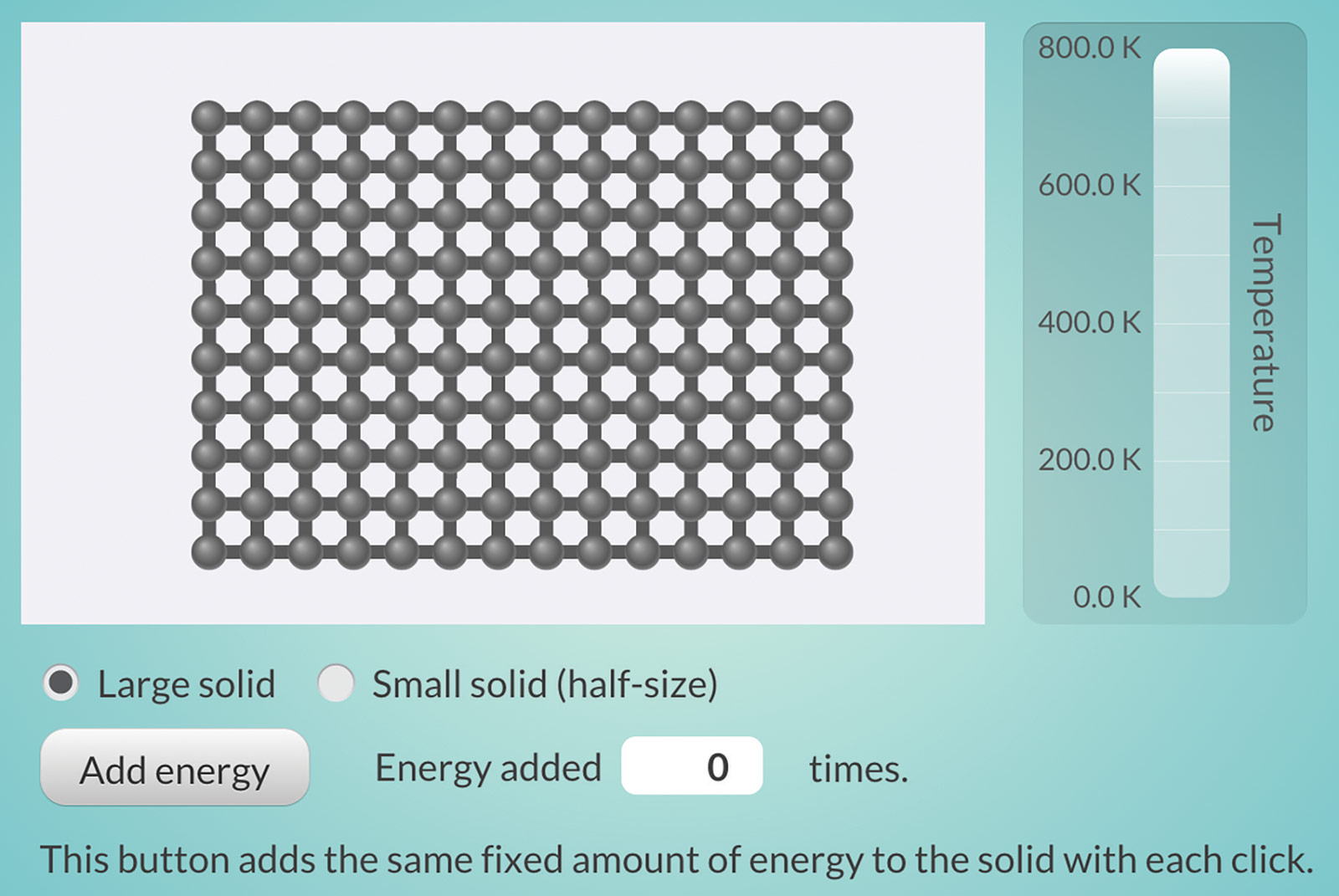

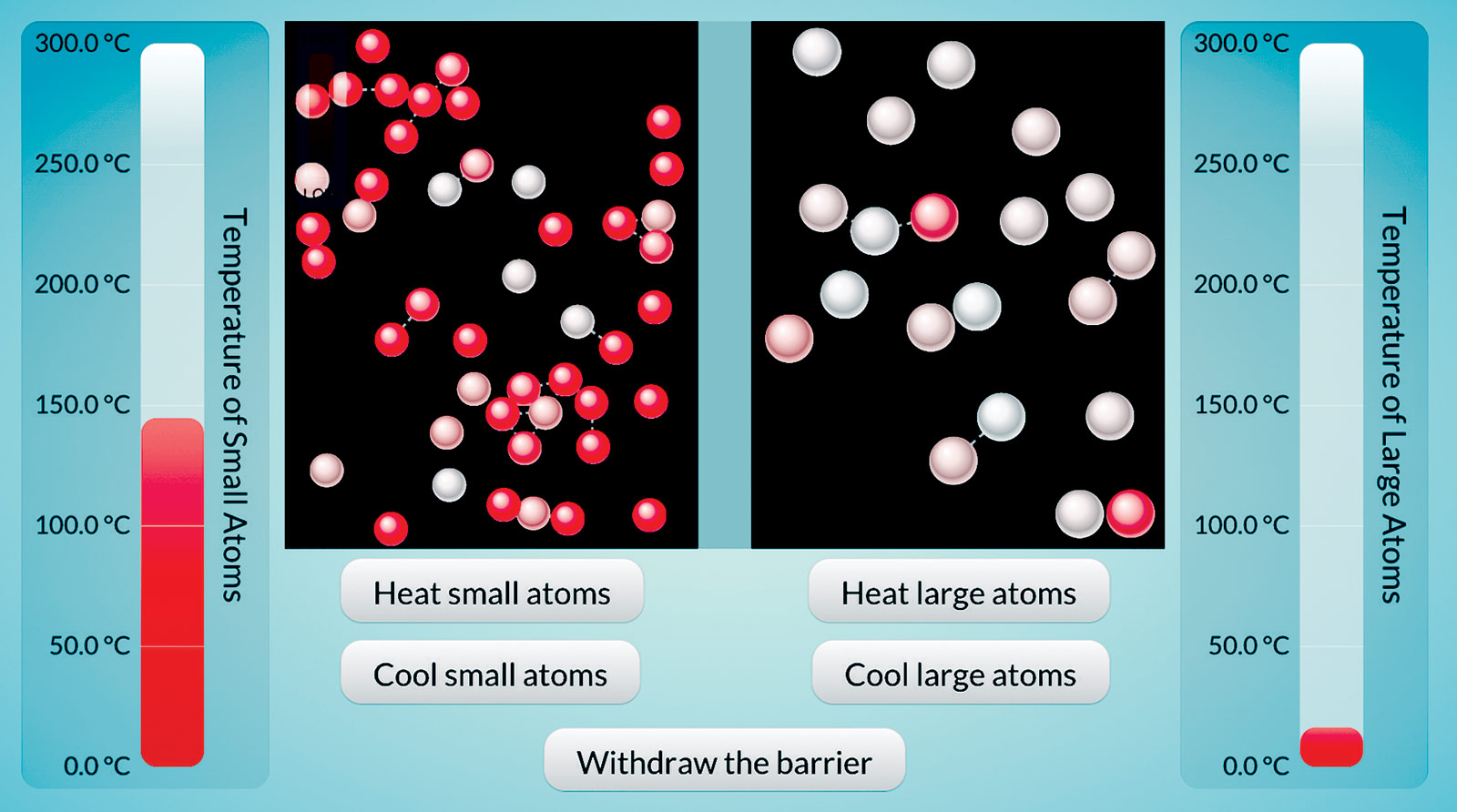

We developed two simulations that are incorporated into a set of assessment items related to this performance expectation (Figures 1 and 2). A single “item” could include a collection of question prompts and student interactions with a simulation. Using these simulations, students can plan and carry out investigations. By collecting their annotations of snapshots of the simulations in various states, we can measure their performance. We are also working on logging student interactions with the simulations themselves, and we will explore how to use this log data to provide further feedback on the strategies students use to plan and carry out the investigation.
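One way to picture the interaction logging mentioned above is as a stream of timestamped events that can be summarized for each student. The sketch below is only an illustration: the event names (`set_variable`, `run`, `snapshot`), the field names and the summary measures are assumptions made for this example, not the project's actual log format or analysis.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class LogEvent:
    """One hypothetical logged interaction with a simulation."""
    student_id: str
    seconds: float      # time since the student opened the item
    action: str         # assumed action names: "set_variable", "run", "snapshot"
    detail: str = ""    # e.g. "mass=2" for a set_variable action


def summarize(events):
    """Return simple per-student counts that a teacher report might show."""
    summary = {}
    for event in events:
        entry = summary.setdefault(
            event.student_id, {"actions": Counter(), "settings_tried": set()}
        )
        entry["actions"][event.action] += 1
        if event.action == "set_variable":
            entry["settings_tried"].add(event.detail)
    return summary


# Made-up events for one student, for illustration only.
example_log = [
    LogEvent("s1", 4.0, "set_variable", "mass=1"),
    LogEvent("s1", 9.5, "run"),
    LogEvent("s1", 15.0, "snapshot"),
    LogEvent("s1", 21.0, "set_variable", "mass=2"),
    LogEvent("s1", 26.0, "run"),
]

report = summarize(example_log)
print(report["s1"]["actions"]["run"])           # number of simulation runs
print(sorted(report["s1"]["settings_tried"]))   # distinct settings explored
```

A summary like this could hint at whether a student varied one factor at a time, though which measures are actually informative is exactly the open question the log analysis is meant to explore.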

Another NGSS practice is “developing and using models.” The use of existing models, as exemplified by the simulations mentioned, falls naturally into this category, but to measure the development of students’ conceptual models we rely on our drawing tool to facilitate their expression. Simulations can provide a way for students to test their models and collect evidence in support of models they have developed. In addition to simulations, we include tabular results of experiments and video clips that demonstrate phenomena, all of which can be used as a basis for evidence in model development.

Developing learning performances

Many of the NGSS performance expectations broadly describe how students will demonstrate knowledge of a disciplinary core idea. The disciplinary core idea cited in a PE often can be linked with one or more additional PEs, so our first step is to identify related PEs and form clusters that we will unpack into an even finer-grained set of PEs we call learning performances (LPs) (Figure 3).

Because the NGSS science practices are interconnected, we write learning performances to target a variety of practices that together support the development of the skill or skills cited in the overarching performance expectation cluster. In developing individual LPs, we unpack them further, defining characteristic task features that are elements of all related assessment items, as well as variable task features that can change from item to item. We then develop a number of assessment items for each LP, run them through internal and expert reviews, conduct alignment studies to determine how well the items align with the original PEs, and pilot the items in various classrooms.
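The split between characteristic and variable task features can be pictured as a small data structure. The following is a minimal sketch, not the project's actual design documents: the class name, the field names and the specific feature values are hypothetical, and only the LP 2 statement is taken from Figure 3.

```python
from dataclasses import dataclass
from itertools import product


@dataclass
class LearningPerformance:
    statement: str
    characteristic_features: list   # shared by every item written for this LP
    variable_features: dict         # feature name -> options that may differ by item

    def item_variants(self):
        """List every combination of the variable feature options."""
        names = list(self.variable_features)
        combos = product(*(self.variable_features[n] for n in names))
        return [dict(zip(names, combo)) for combo in combos]


lp2 = LearningPerformance(
    statement=("Plan and carry out an investigation to explore how mass affects "
               "the change in temperature of a sample when adding or removing "
               "thermal energy."),
    characteristic_features=[       # hypothetical examples
        "student chooses which variable to change and which to hold constant",
        "student records temperature before and after energy transfer",
    ],
    variable_features={             # hypothetical examples
        "substance": ["water", "oil"],
        "energy_direction": ["added", "removed"],
    },
)

variants = lp2.item_variants()
print(len(variants))   # number of distinct item variants
print(variants[0])     # first combination of variable features
```

Each variant keeps the characteristic features fixed while changing the surface details, which is one way several items could target the same LP without being copies of one another.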

After analyzing data from our first pilot, we will develop statistical models to characterize the evidence produced by students and determine how this feedback can be used by teachers in the classroom. We will also use this analysis in the development process to revise and refine our items, scoring rubrics, delivery mechanisms and reporting features.

Our goal is to provide both a detailed description of how to develop NGSS assessment items and a set of example assessments for use in many classroom settings. We hope to provide guidance for curriculum developers who will be embedding formative measures in their materials and to prompt institutions that develop summative assessments to consider new ways of thinking about assessment item design for performance-based standards.

| We clustered two energy-related performance expectations: |
| --- |
| MS-PS1-4. Develop a model that predicts and describes changes in particle motion, temperature, and state of a pure substance when thermal energy is added or removed. |
| MS-PS3-4. Plan an investigation to determine the relationships among the energy transferred, the type of matter, the mass and the change in the average kinetic energy of the particles as measured by the temperature of the sample. |
| We developed five learning performances specific to Grade 7 for the energy-related PEs above: |
| Students should be able to … |
| LP 1: Evaluate the accuracy of an experimental design built to investigate how types of matter influence the magnitude of temperature change of a given sample when adding or removing thermal energy. |
| LP 2: Plan and carry out an investigation to explore how mass affects the change in temperature of a sample when adding or removing thermal energy. |
| LP 3: Plan and carry out an investigation to explore the relationship between energy transfer and temperature changes. |
| LP 4: Analyze and interpret data that describes the relationship between how mass and types of matter affect the change in temperature of a sample when adding or removing thermal energy. |
| LP 5: Construct an explanation that links energy transferred, the type of matter, the mass and the change in the average kinetic energy of the particles as measured by the temperature of the sample. |

Figure 3. A performance expectation cluster based on related disciplinary core ideas and corresponding learning performances that draw on multiple interrelated practices.

Dan Damelin (ddamelin@concord.org) is a technology and curriculum developer.

This material is based upon work supported by the National Science Foundation under grant DRL-1316874. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.