Automated Scoring Helps Student Argumentation

For the past four years, a robot avatar named HASBot has provided feedback to hundreds of middle and high school students on how to write strong scientific arguments. HASBot—a combination of High-Adventure Science or “HAS” and “Bot” as in automated scoring robot—came to digital life through the National Science Foundation-funded High-Adventure Science: Automated Scoring for Argumentation project. In partnership with the Educational Testing Service (ETS), we integrated HASBot into two curriculum modules in which students use models and real-world data to investigate issues related to humans and environmental sustainability.

In the “What is the future of Earth’s climate?” module, we paired HASBot with eight argumentation item sets. Each item set consists of four parts: a multiple-choice claim, an open-ended explanation, a Likert scale certainty rating, and an open-ended certainty explanation. HASBot provided students with feedback on their typed explanation and certainty rationale responses.
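As a rough illustration, one argumentation item set could be represented as a small record with the four parts described above. This is only a sketch: the class and field names are hypothetical, and the article does not say how many points the Likert scale has, so the rating is stored as a plain integer.

```python
from dataclasses import dataclass


@dataclass
class ArgumentationItemSet:
    """One student's answers to a four-part argumentation item set (hypothetical layout)."""

    claim: str                # the multiple-choice option the student selected
    explanation: str          # open-ended explanation of the claim
    certainty_rating: int     # Likert rating; the scale range is not given in the article
    certainty_rationale: str  # open-ended explanation of the certainty rating

    def scored_parts(self) -> dict[str, str]:
        """Return the two typed responses that receive automated feedback."""
        return {
            "explanation": self.explanation,
            "certainty_rationale": self.certainty_rationale,
        }


# Hypothetical example response, not taken from real student data.
example = ArgumentationItemSet(
    claim="The temperature decreases",
    explanation="When I removed carbon dioxide, the temperature graph went down.",
    certainty_rating=4,
    certainty_rationale="The model showed the same result each time I ran it.",
)
```

Only the two open-ended parts are returned by `scored_parts`, mirroring the fact that HASBot gives feedback on the explanation and the certainty rationale rather than on the claim or the rating.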

Training HASBot

The ability to provide students with meaningful feedback on their open-ended written responses requires computers to “learn” from humans first. A computer needs to be “taught” the features of a good argument using machine learning (ML) techniques from natural language processing (NLP). This is not trivial, and the process involves several steps.

First, we needed to identify the range of students’ responses. During the six years of the High-Adventure Science project, dozens of teachers used the climate module with their students. We developed rubrics and scored between 1,200 and 2,000 scientific explanation and certainty explanation responses for each argumentation item set. As a result, we were familiar with the language students used to explain their claims and certainty ratings. Based on our knowledge of how students express themselves, we then developed a new rubric structure that would allow us to provide specific types of feedback.

To train the automated scoring models, the human-scored responses were input into c-rater-ML, an NLP system developed by ETS. The scores produced by the automated scoring models were compared to the human scores, and the algorithms were adjusted until the automated scores matched the human scores at least 70% of the time. Even though automated scoring is not perfect, it is good enough to provide students with timely, targeted, and consequential feedback to help them improve their arguments with data and reasoning.
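One simple way to read “matched human scores at least 70% of the time” is as exact agreement: the fraction of responses on which the automated score equals the human score. The sketch below computes that fraction under this assumption. The function names and the sample scores are hypothetical, and this is not the c-rater-ML evaluation procedure, which may rely on other agreement measures.

```python
def exact_agreement(human_scores, machine_scores):
    """Fraction of responses where the automated score equals the human score."""
    if len(human_scores) != len(machine_scores):
        raise ValueError("Both score lists must cover the same responses.")
    if not human_scores:
        raise ValueError("At least one scored response is required.")
    matches = sum(h == m for h, m in zip(human_scores, machine_scores))
    return matches / len(human_scores)


def meets_threshold(human_scores, machine_scores, threshold=0.70):
    """Check whether agreement reaches the 70% level mentioned in the article."""
    return exact_agreement(human_scores, machine_scores) >= threshold


# Made-up rubric scores (0 through 6) for ten responses.
human = [0, 2, 4, 6, 3, 1, 5, 2, 4, 3]
machine = [0, 2, 4, 5, 3, 1, 5, 2, 3, 3]

agreement = exact_agreement(human, machine)  # 8 of 10 scores match
print(f"Exact agreement: {agreement:.0%}")
print("Meets 70% threshold:", meets_threshold(human, machine))
```

Exact agreement treats a one-point miss the same as a six-point miss, which is why it is only a first approximation of how well an automated model tracks human raters.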

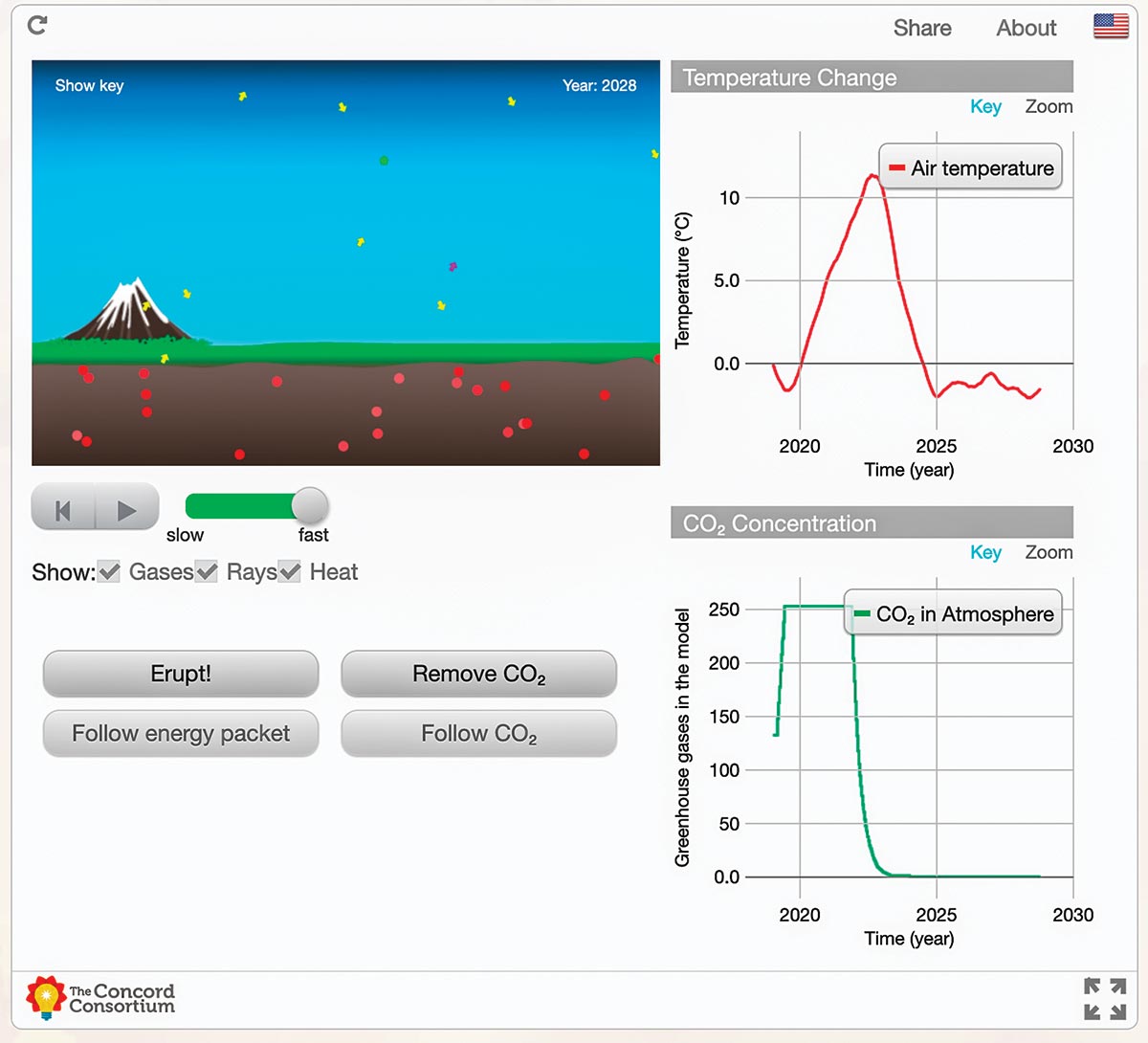

In one of the climate module activities, students use a model to determine the effect of carbon dioxide on atmospheric temperature. Students add and remove carbon dioxide in the model and observe the interactions of solar and infrared radiation with carbon dioxide. Graphs of model outputs help students determine the relationship between carbon dioxide and temperature (Figure 1).

In the argumentation item set that follows the model, students make a claim about what happens to the temperature if all of the carbon dioxide is removed from the atmosphere. Students explain their claim, make a certainty rating, and describe what influenced their certainty. Each of these questions contains a hint about how to select a claim, the components that make up a good explanation, and factors that might influence one’s certainty about a particular claim. After completing the argumentation item set, students submit their responses. Within four seconds, HASBot provides specific feedback on their explanation and certainty rationale responses.

The feedback is designed to complement the hints embedded in each question, helping students recognize the features of a complete scientific argument. After receiving feedback, students can edit their responses.

HASBot’s feedback and one student’s responses

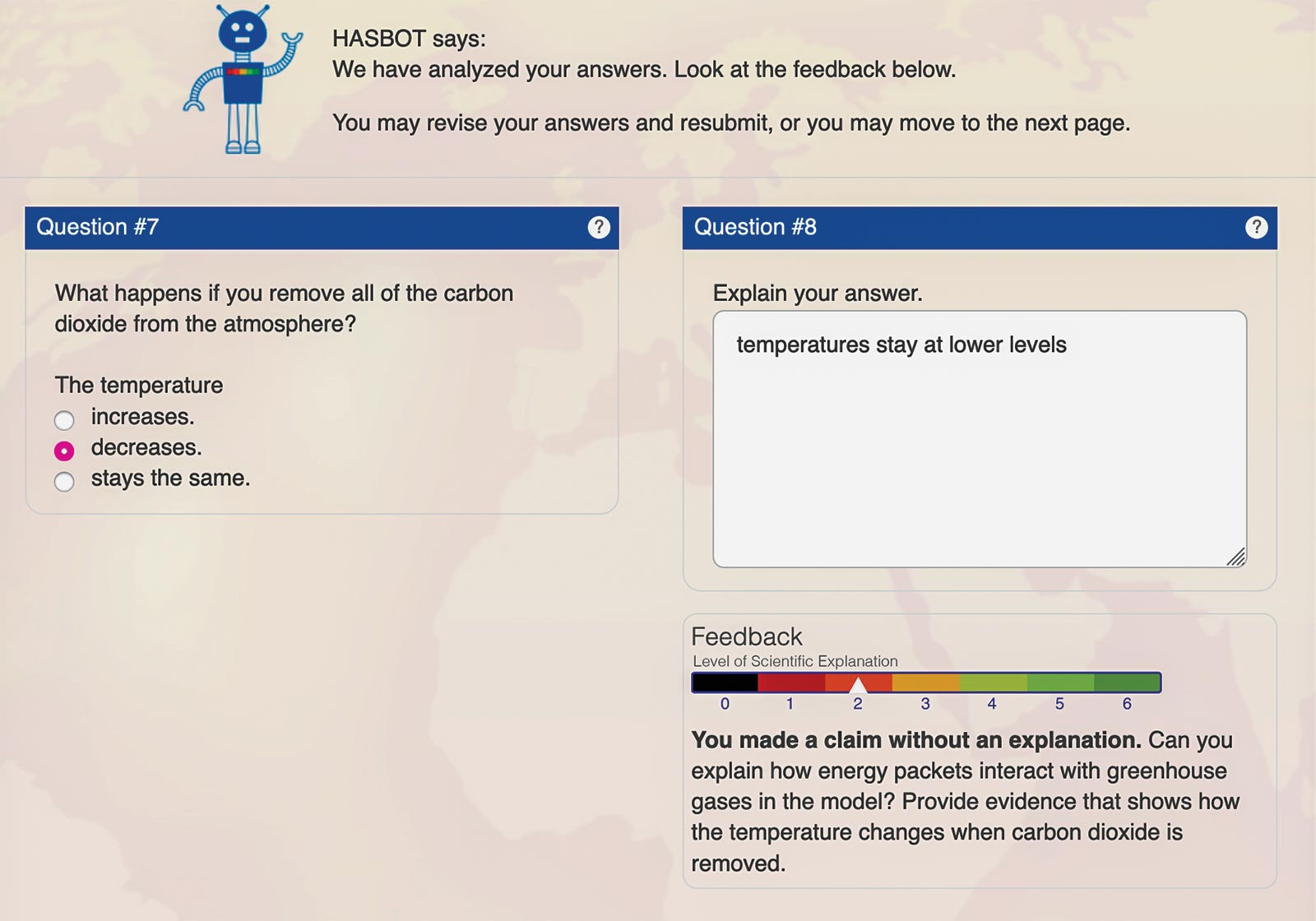

Now let’s follow one student, Tom, to see the role of HASBot’s feedback in the modifications Tom makes to his explanation responses. When Tom submits his first response, he repeats his claim that the temperature decreases when carbon dioxide is removed from the model (Figure 2). He does not cite specific evidence from the model or any reasoning to explain why the temperature would decrease, so HASBot suggests he support his claim with evidence and reasoning. Tom receives a score for his scientific explanation in a rainbow bar. The bold text below the bar describes his score in a diagnostic statement, while the plain text includes suggestions for improving his argument. The feedback is different for each level of the scientific explanation rubric (scores 0 through 6).

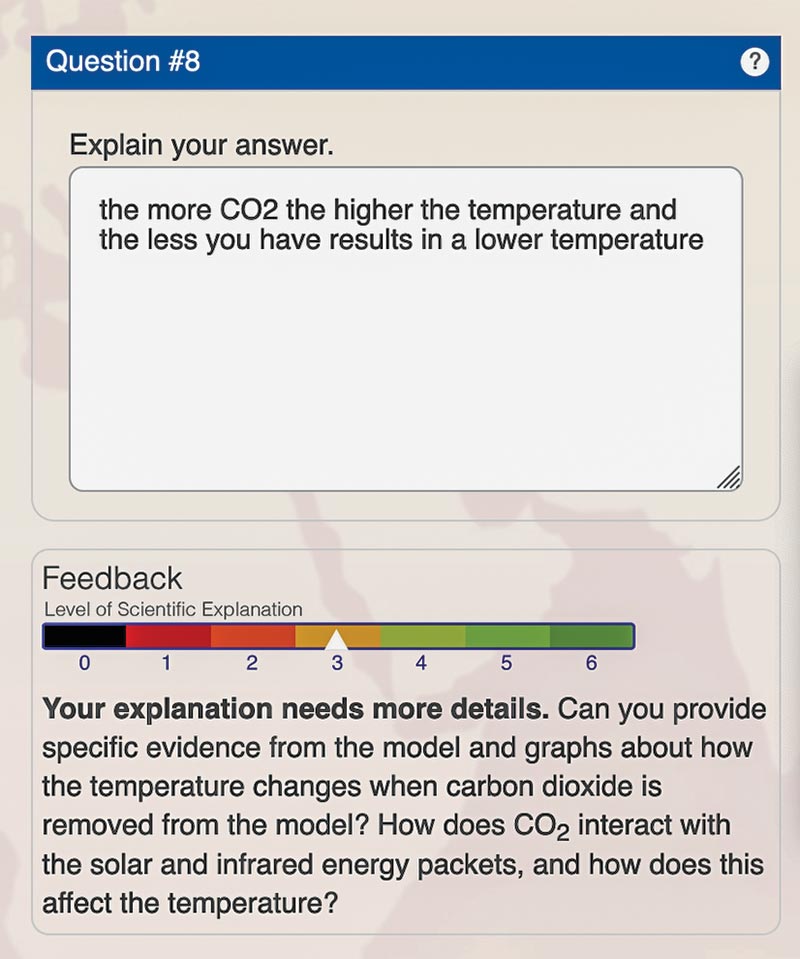

Tom then edits his response and submits a second time, this time citing a positive correlation between the amount of atmospheric carbon dioxide and temperature (Figure 3). In response, HASBot recommends that Tom provide specific evidence from the model and graphs, as well as reasoning about greenhouse gas and solar radiation interactions to support his claim.

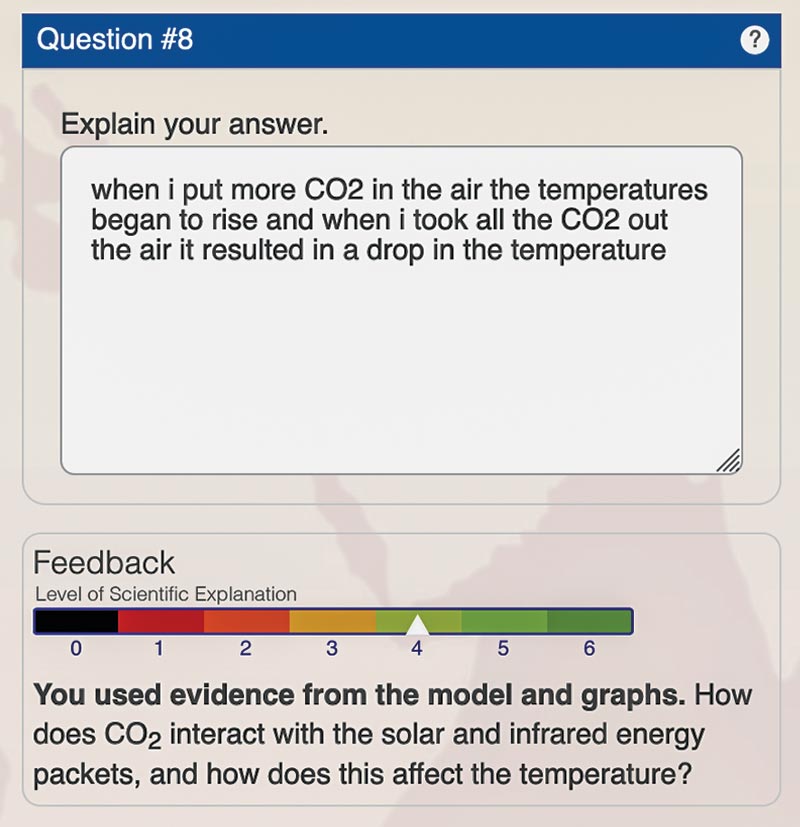

When Tom modifies his answers for a third submission, he includes specific data from his experiments with the model, explaining that adding CO2 in the model results in a temperature rise and removing CO2 in the model results in a temperature decrease (Figure 4). This is an improvement, but it is not the most complete explanation possible. If Tom had included reasoning about why the temperature increases (the mechanism of greenhouse gas and radiation interactions), he would have received the highest scientific explanation score.

The explanation feedback is designed to encourage students to include both data and reasoning in the explanations of their claims. HASBot provides similar targeted feedback on students’ certainty rationales, encouraging them to consider their certainty in light of the data and evidence presented.

The role of the teacher in providing feedback

While HASBot prompted Tom to modify his responses, the teacher must also be kept in the loop to help students make sense of the feedback and the underlying scientific concepts. We developed a teacher dashboard that allows the teacher to quickly scan color-coded scores to monitor the progress of the whole class. The teacher can see each student’s scores and assess whether a student is improving over the course of one or more responses, or whether a student is stuck on a particular score, perhaps because he or she is struggling with the science content or the more general understanding of claim, evidence, and reasoning. While HASBot is designed to help students, ultimately the teacher decides how to engage individual students or the entire class for a deeper discussion. Even a trained robot is no substitute for a classroom teacher.
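The kind of summary a dashboard might surface can be sketched as a small function that labels a student’s sequence of scores on one item. This is a hypothetical illustration, not the dashboard’s actual logic: the function name, the labels, and the rules for “improving” and “stuck” are all assumptions.

```python
def progress_status(scores):
    """Label a student's submission history for one item (hypothetical rules).

    `scores` lists the rubric scores in submission order.
    """
    if not scores:
        return "no submission"
    if len(scores) == 1:
        return "single submission"
    if scores[-1] > scores[0]:
        return "improving"   # latest score is higher than the first
    if len(set(scores)) == 1:
        return "stuck"       # every submission earned the same score
    return "mixed"           # scores changed but did not end higher


# Made-up score histories for three students.
histories = {
    "student_a": [2, 3, 4],
    "student_b": [3, 3, 3],
    "student_c": [4],
}
for student, scores in histories.items():
    print(student, progress_status(scores))
```

A label like “stuck” is only a prompt for the teacher to look closer; deciding why a student is stuck, and what to do about it, remains the teacher’s call.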

Sarah Pryputniewicz (spryputniewicz@concord.org) is a research assistant.

Amy Pallant (apallant@concord.org) is a senior research scientist.

This material is based upon work supported by the National Science Foundation under grant DRL-1418019. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.