Under the Hood: Hands-on Interactive Activities with Leap Motion

Three years ago, Leap Motion, Inc. released a small USB device that had the potential to offer a completely new way for people to interact with computers. Using a combination of three infrared LEDs and two cameras, the device can track the position and movement of hands in three dimensions, enabling gesture-based controls for applications. It is a welcome change from over 30 years of clicking a mouse.

The GRASP (Gesture Augmented Simulations for Supporting Explanations) project is leveraging this capability and using the Leap Motion controller in desktop mode to capture hand movements and control web applications. (Learn more in “GRASPing Invisible Concepts” in the spring 2016 @Concord.)

With the Leap V2 SDK, defining gesture-based interactions for controlling a web application via JavaScript becomes quite simple. Events from the Leap are captured in the processLeapFrame function:

```javascript
processLeapFrame(frame) {
  const hands = frame.hands;
  const data = {};
  data.numberOfHands = hands.length;
  …
}
```

Frames are snapshots of data sent from the Leap device to the application multiple times per second. From this frame data, we can determine the number of hands in view. We can also determine the angle of each tracked hand using hand.roll() and the relative velocity of each hand using hand.palmVelocity. Many other types of gestures can be detected, including pinch, grab, and individual finger positions.
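As a rough illustration of the frame data described above, the following sketch collects the hand count, roll, palm velocity, and grab and pinch strengths into a plain object. The function name `summarizeFrame`, the shape of the returned object, and the stand-in `sampleFrame` are assumptions made for this example; they are not taken from the GRASP code. A mock frame is used so the snippet runs without a device attached.

```javascript
// Minimal sketch: summarize one Leap frame into plain data.
// `summarizeFrame` and the returned object shape are illustrative
// assumptions, not part of the GRASP code or the Leap SDK.
function summarizeFrame(frame) {
  return {
    numberOfHands: frame.hands.length,
    hands: frame.hands.map((hand) => ({
      rollRadians: hand.roll(),          // roll angle of the hand, in radians
      palmVelocity: hand.palmVelocity,   // [x, y, z] velocity of the palm
      grabStrength: hand.grabStrength,   // 0 (open hand) to 1 (closed fist)
      pinchStrength: hand.pinchStrength, // 0 (no pinch) to 1 (full pinch)
    })),
  };
}

// Stand-in frame with one hand, shaped like the fields used above.
const sampleFrame = {
  hands: [
    {
      roll: () => 0.5,
      palmVelocity: [10, -200, 0],
      grabStrength: 0.9,
      pinchStrength: 0.1,
    },
  ],
};

const summary = summarizeFrame(sampleFrame);
console.log(summary.numberOfHands, summary.hands[0].rollRadians);
```

With the Leap JavaScript library loaded and a device connected, the same function could presumably be called on each frame delivered to a `Leap.loop` callback.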

The Leap API translates the data returned from the cameras and reports each of these gestures along with an additional metric: the level of confidence of detection. The Leap hardware uses infrared cameras to detect movement, so bright sunshine can impair the ability to track hands. Overlapping hands are not easily identified, which lowers the confidence level and can degrade tracking performance. The design of a gesture-controlled application must take into consideration both how easily the user can perform a particular gesture and how likely the device is to recognize it.
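One way an application might act on the confidence metric is to ignore hands whose confidence falls below a cutoff. The sketch below assumes each hand exposes a `confidence` value between 0 and 1; the helper name `reliableHands` and the cutoff `MIN_CONFIDENCE` are hypothetical, and a real value would need tuning against actual lighting conditions.

```javascript
// Hypothetical cutoff; an illustrative value, not one used by GRASP.
const MIN_CONFIDENCE = 0.4;

// Keep only the hands the device reports with enough confidence.
// `reliableHands` is an assumed helper name for this sketch.
function reliableHands(frame, minConfidence = MIN_CONFIDENCE) {
  return frame.hands.filter((hand) => hand.confidence >= minConfidence);
}

// Stand-in frame: one well-tracked hand and one poorly tracked hand.
const noisyFrame = {
  hands: [
    { id: 1, confidence: 0.9 },
    { id: 2, confidence: 0.15 },
  ],
};

const kept = reliableHands(noisyFrame);
console.log(kept.map((hand) => hand.id)); // only the confident hand remains
```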

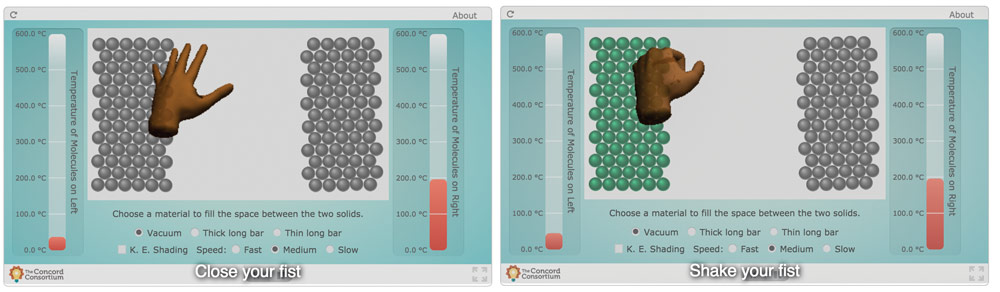

The gestures that have proved most successful for GRASP in terms of their ability to be recognized reliably are those that involve significant hand movement and rotation. In our Molecular Workbench heat transfer simulation, for example, the user must “grab” a molecule and shake it to initiate interaction (Figure 1). The grab strength at which a hand counts as a closed fist is configurable:

```javascript
const hand1 = frame.hands[0];
const hand2 = frame.hands[1];
let closedHands = 0;

if (hand1 && hand1.grabStrength > this.config.closedGrabStrength) {
  closedHands += 1;
}

if (hand2 && hand2.grabStrength > this.config.closedGrabStrength) {
  closedHands += 1;
}
```
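The article does not show how the "shake" half of the grab-and-shake gesture is detected. One plausible approach, offered here only as a sketch, is to count reversals in the horizontal palm velocity while the hand stays closed. The class name `ShakeDetector`, its options, and the threshold values are all hypothetical.

```javascript
// Hypothetical shake detector: counts left/right direction reversals of a
// closed hand. Names and thresholds are assumptions, not GRASP code.
class ShakeDetector {
  constructor({ minSpeed = 150, reversalsNeeded = 3 } = {}) {
    this.minSpeed = minSpeed;               // slower motion is ignored
    this.reversalsNeeded = reversalsNeeded; // reversals that count as a shake
    this.lastDirection = 0;                 // -1, 0 (unknown), or 1
    this.reversals = 0;
  }

  // Call once per frame with the horizontal palm velocity
  // (for example hand.palmVelocity[0]) and whether the hand is closed.
  // Returns true once enough reversals have been seen.
  update(velocityX, isClosed) {
    if (!isClosed) {
      // Opening the hand resets the gesture.
      this.lastDirection = 0;
      this.reversals = 0;
      return false;
    }
    if (Math.abs(velocityX) >= this.minSpeed) {
      const direction = Math.sign(velocityX);
      if (this.lastDirection !== 0 && direction !== this.lastDirection) {
        this.reversals += 1;
      }
      this.lastDirection = direction;
    }
    return this.reversals >= this.reversalsNeeded;
  }
}

// Simulated frames: a closed hand moving right, left, right, left.
const detector = new ShakeDetector();
const shakeResults = [300, -320, 310, -290].map((vx) => detector.update(vx, true));
console.log(shakeResults);
```

Requiring several reversals above a minimum speed is one way to avoid treating slow drift or a single swipe as a shake.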

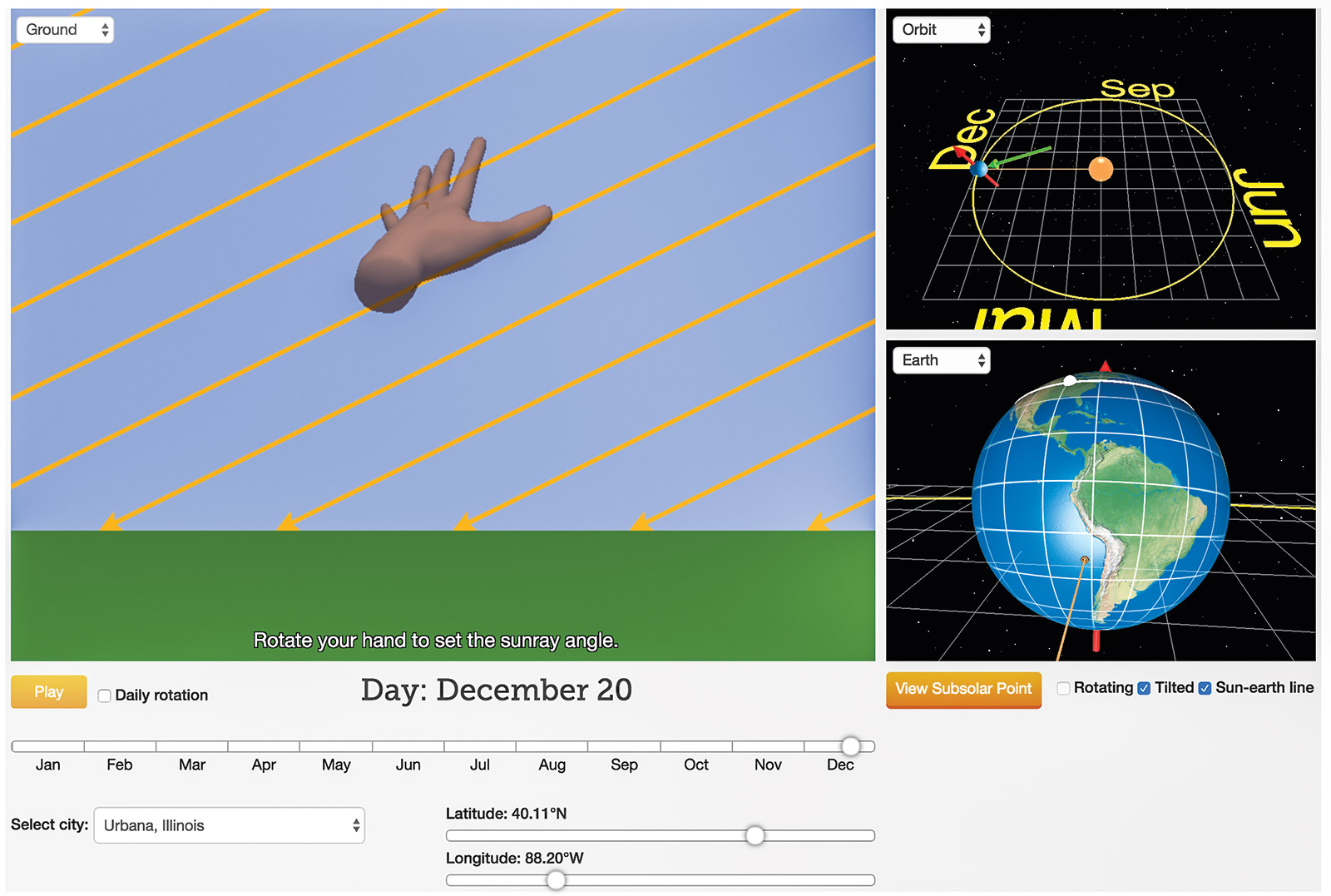

In our seasons simulation (Figure 2), the angle of the sun’s rays relative to the ground is controlled by the roll of the detected hand, with some tolerance adjustments:

```javascript
function isPointingLeft(hand) {
  return angleBetween(hand.palmNormal, PALM_POINTING_LEFT_NORMAL) < HAND_POINTING_LEFT_TOLERANCE;
}
```
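The helper `angleBetween` and the two constants are not defined in the article. A minimal sketch of what they might look like follows, assuming vectors are `[x, y, z]` arrays, the angle is measured in radians, and the Leap x-axis points to the user's right so that "left" is the negative x direction. The constant values below are guesses chosen for illustration.

```javascript
// Hypothetical helper: angle in radians between two 3D vectors
// given as [x, y, z] arrays.
function angleBetween(a, b) {
  const dot = a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
  const lengths = Math.hypot(...a) * Math.hypot(...b);
  // Clamp so rounding error cannot push the cosine outside [-1, 1].
  const cosine = Math.min(1, Math.max(-1, dot / lengths));
  return Math.acos(cosine);
}

// Assumed values for this sketch, not the ones used by GRASP.
const PALM_POINTING_LEFT_NORMAL = [-1, 0, 0];
const HAND_POINTING_LEFT_TOLERANCE = Math.PI / 6; // 30 degrees

function isPointingLeft(hand) {
  return angleBetween(hand.palmNormal, PALM_POINTING_LEFT_NORMAL) < HAND_POINTING_LEFT_TOLERANCE;
}

// Stand-in hands: one with the palm facing mostly left, one facing down.
const leftFacing = isPointingLeft({ palmNormal: [-0.95, -0.3, 0.05] });
const downFacing = isPointingLeft({ palmNormal: [0, -1, 0] });
console.log(leftFacing, downFacing);
```

Comparing an angle against a tolerance, rather than testing for an exact direction, lets the gesture register even when the user's hand is not perfectly aligned.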

Being able to interact with simulations via hand gestures supports GRASP research goals. The Leap Motion device with the V2 SDK makes this possible within the context of a web browser.

Christine Hart (chart@concord.org) is a senior software developer.

This material is based upon work supported by the National Science Foundation under grant DUE-1432424. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.