Under the Hood: Sharing the Sky in an AR Planetarium

“Look over there, that’s Orion. And there’s Ursa Major.” If you can pick out the stars in the night sky and understand how they move, there’s a good chance someone taught you by pointing with an outstretched arm to the landmark constellations, marking the paths across the sky, and dividing the myriad points of light into recognizable patterns. Knowledge of the night sky tends to be passed on through an oral tradition combined with physical gestures.

Traditional ways of learning about the stars and the motions of the sun, moon, and planets employ elements common to augmented reality: collaboration and embodiment. The CEASAR project (Connections of Earth and Sky Using Augmented Reality) is researching methods of collaborative problem-solving using augmented reality (AR). With AR, users see digital objects superimposed on their surroundings, which makes collaboration possible because learners can still see and communicate with those around them. But there are few educational examples of AR. Our goal is to demonstrate the benefits of an immersive augmented reality platform for collaborative learning and problem-solving.

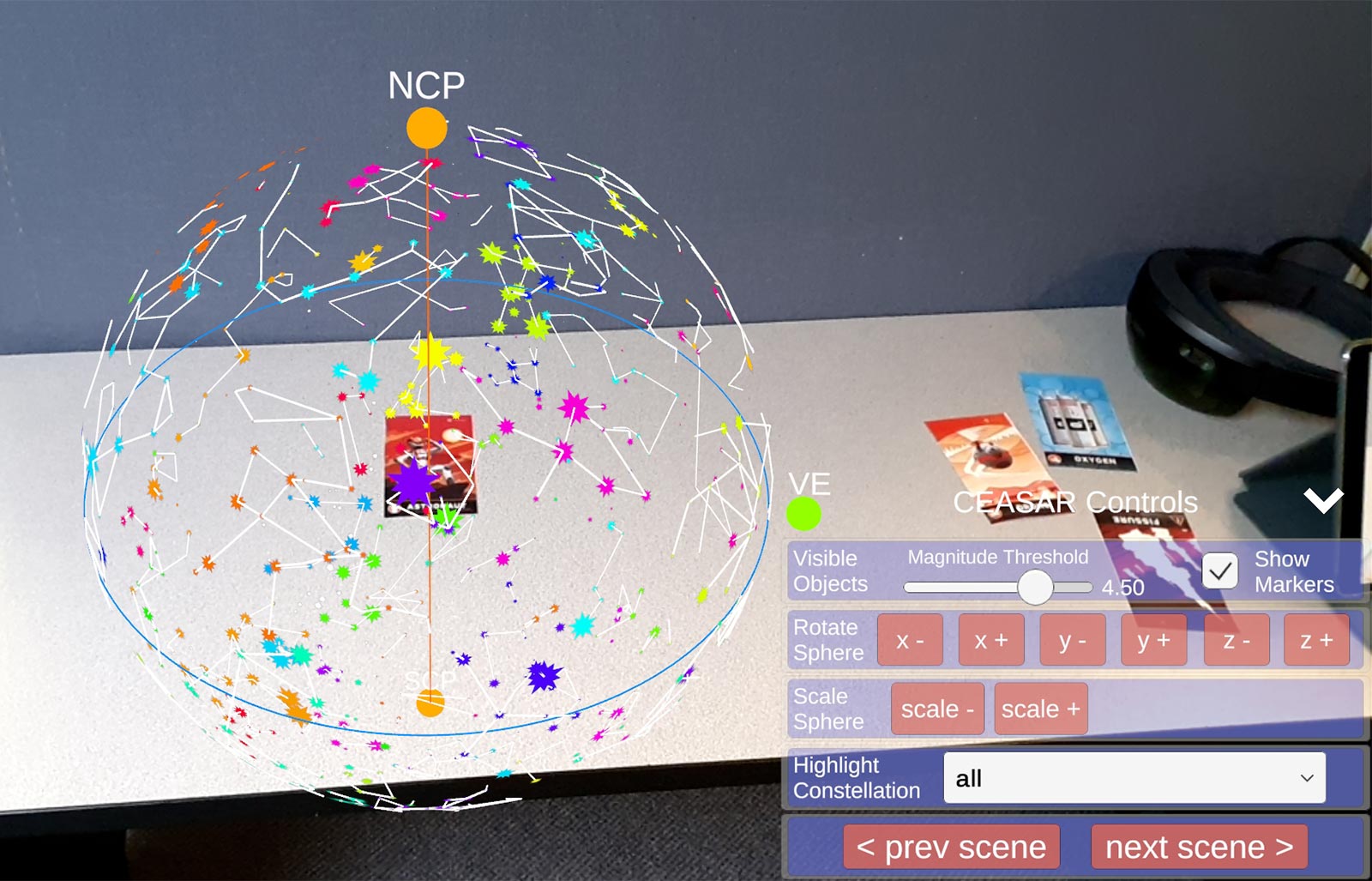

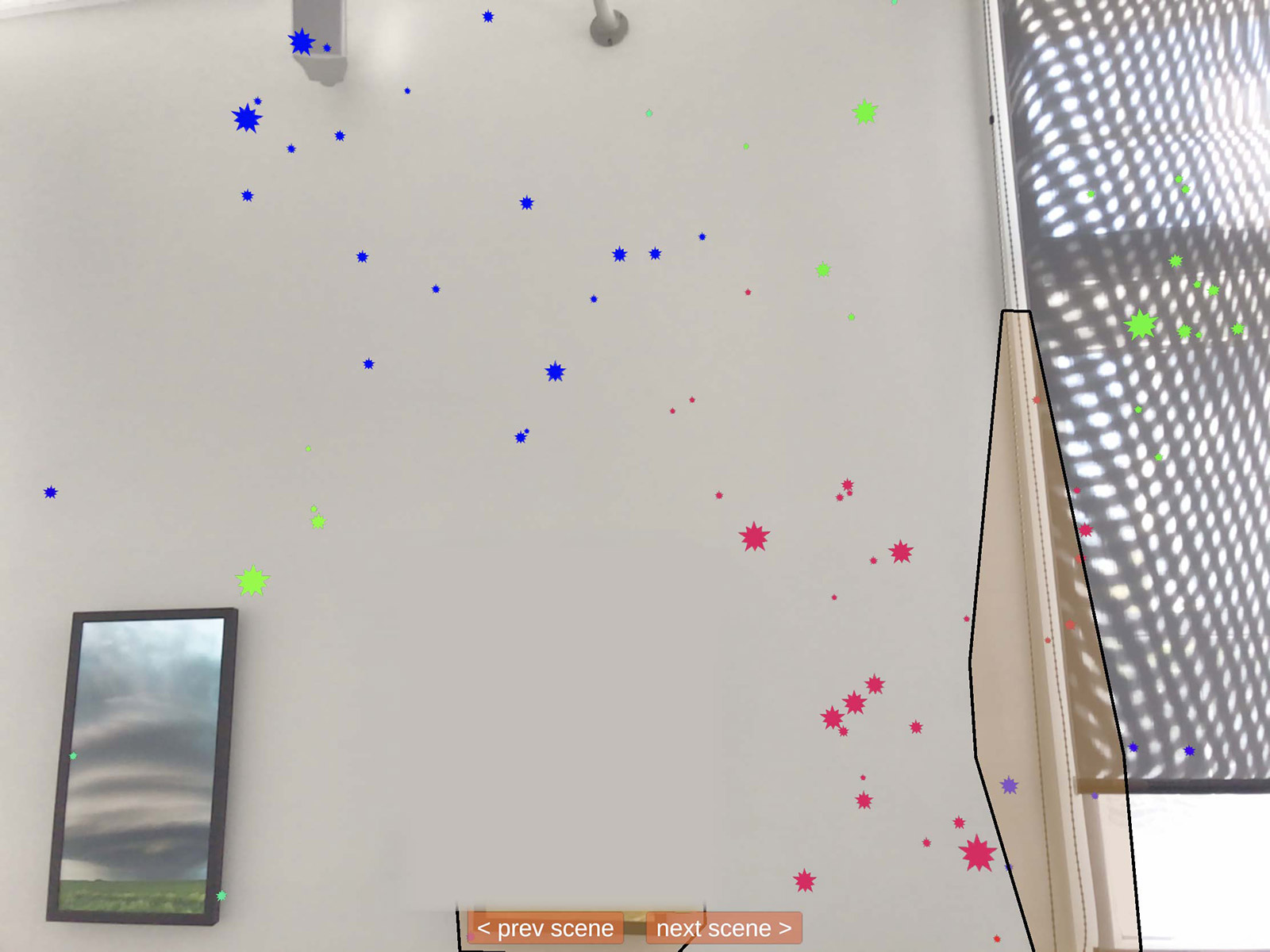

CEASAR is building on social, situated learning that incorporates dialogue, gesture, and 3D learning to teach about astronomy. We are developing a model of the sun, moon, and stars that mimics a real view of the sky surrounding the user, like a planetarium (Figure 1). Students can opt for multiple perspectives, including both the classic celestial sphere model (Figure 2), which resembles a “ball of stars” with the Earth at the center, and the familiar heliocentric solar system model (Figure 3).

The 3D holographic models are superimposed on a physical space, such as a classroom. Viewing the models through their devices, students learn about the heavens by using gestures and conversation to share observations. They can move around, change their gaze, and collaborate with others who may be viewing different models or looking at the sky from different vantage points.

The models run on Microsoft’s HoloLens, a self-contained wearable holographic headset with a great degree of 3D immersion and the ability to capture the user’s gestures. We will also support the more common devices found in classrooms, including tablets and phones. To do so, we’re leveraging the cross-platform publishing features built into the Unity game engine to render the same star models in a web client, in a native desktop application, on mobile devices, and on the HoloLens. We are currently able to render the brightest 9,000 stars (from a free database) in 3D, projected onto the surface of a sphere that can be viewed from inside or outside. By calculating the sky’s orientation from a date and time, we can rotate this celestial sphere to show the sky at any moment in the past or future.
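For readers curious about the geometry, here is a minimal sketch in Python of the two steps described above: placing a star on a unit sphere from its catalog coordinates, and rotating the sphere for a chosen date and time. It is not the project's Unity code. The function names are hypothetical, the axes follow a conventional right-handed equatorial frame rather than any game engine's, and the rotation uses a common linear approximation of Greenwich sidereal time that ignores small long-term corrections.

```python
import math
from datetime import datetime, timezone


def radec_to_unit_vector(ra_hours, dec_degrees):
    """Place a star on a unit sphere from right ascension (hours) and declination (degrees)."""
    ra = math.radians(ra_hours * 15.0)  # 24 hours of right ascension span 360 degrees
    dec = math.radians(dec_degrees)
    return (math.cos(dec) * math.cos(ra),
            math.cos(dec) * math.sin(ra),
            math.sin(dec))  # z points at the north celestial pole


def julian_date(moment):
    """Julian date of a timezone-aware datetime."""
    return moment.timestamp() / 86400.0 + 2440587.5


def greenwich_sidereal_angle_degrees(moment):
    """Approximate Greenwich mean sidereal time, expressed as an angle in degrees."""
    days_since_j2000 = julian_date(moment) - 2451545.0
    return (280.46061837 + 360.98564736629 * days_since_j2000) % 360.0


def rotate_about_polar_axis(vector, angle_degrees):
    """Rotate a vector about the z (polar) axis by the given angle."""
    a = math.radians(angle_degrees)
    x, y, z = vector
    return (x * math.cos(a) - y * math.sin(a),
            x * math.sin(a) + y * math.cos(a),
            z)


def earth_fixed_direction(ra_hours, dec_degrees, moment):
    """Direction of a star in a frame that turns with the Earth (x axis through Greenwich)."""
    star = radec_to_unit_vector(ra_hours, dec_degrees)
    # Turning the whole sphere by minus the sidereal angle sets it to the chosen moment.
    return rotate_about_polar_axis(star, -greenwich_sidereal_angle_degrees(moment))


if __name__ == "__main__":
    # Betelgeuse, using rounded catalog coordinates (assumed values for illustration).
    moment = datetime(2024, 1, 15, 3, 0, tzinfo=timezone.utc)
    print(earth_fixed_direction(5.92, 7.41, moment))
```

Because every star shares the same rotation, a renderer only needs to compute one angle per moment and apply it to the whole sphere, rather than recomputing each of the 9,000 star positions.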

We have also implemented networking, using the multiplayer game server Colyseus running on Heroku, so that users on different devices can share their observations. Students can now highlight constellations to share with a friend, just as if they were pointing to the real night sky.
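To make the idea of sharing a highlight concrete, here is a small sketch in Python of how one device's selection might be relayed to the others. It does not use the Colyseus API or reflect the project's actual message format. The `ConstellationHighlight` fields and the `SharedSkyRoom` class are hypothetical stand-ins for a real multiplayer room, kept in memory so the example runs on its own.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ConstellationHighlight:
    """Hypothetical message: who highlighted which constellation."""
    sender_id: str
    constellation: str
    highlighted: bool


def encode(message):
    """Serialize a highlight as JSON text, as it might travel over the network."""
    return json.dumps(asdict(message))


def decode(payload):
    """Rebuild a highlight from its JSON text."""
    return ConstellationHighlight(**json.loads(payload))


class SharedSkyRoom:
    """In-memory stand-in for a multiplayer room that relays highlights."""

    def __init__(self):
        self._inboxes = {}

    def join(self, client_id):
        self._inboxes[client_id] = []

    def broadcast(self, message):
        """Deliver the message to every client except the one who sent it."""
        payload = encode(message)
        for client_id, inbox in self._inboxes.items():
            if client_id != message.sender_id:
                inbox.append(payload)

    def receive(self, client_id):
        """Return and clear the messages waiting for one client."""
        pending = [decode(p) for p in self._inboxes[client_id]]
        self._inboxes[client_id].clear()
        return pending


if __name__ == "__main__":
    room = SharedSkyRoom()
    room.join("hololens")
    room.join("tablet")
    room.broadcast(ConstellationHighlight("hololens", "Orion", True))
    print(room.receive("tablet"))
```

Sending only the constellation's name, rather than any rendered geometry, keeps the message small and lets each device draw the highlight in whichever model its user is viewing.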

Nathan Kimball (nkimball@concord.org) is a curriculum developer.

Chris Hart (chart@concord.org) is a research scientist.

This material is based upon work supported by the National Science Foundation under grant IIS-1822796. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.