GRASPing Invisible Concepts

“Hand waving” is often used to characterize a nebulous explanation that’s short on details. But could the literal waving of hands, or other gestures and movements of the body, be key to reasoning about scientific concepts? The notion that human cognition is rooted in the body is not new, and a growing research effort is now testing the idea. So, how can we determine the relationship between body motion and learning? If we uncover a relationship, can we use that knowledge to enhance learning? Finally, can we use discoveries from this research to create learning environments that use body motion to help students build better mental models of difficult concepts?

The GRASP (Gesture Augmented Simulations for Supporting Explanations) project, funded by the National Science Foundation, is exploring these questions by investigating how middle school students learn important science topics that are difficult because their underlying causes are hidden from everyday experience. For example, middle school students learn that conductive heat transfer is caused by interactions resulting from the ceaseless motion of the molecules of matter. However, few can explain why the handle of a spoon set in a cup of hot tea grows warm. Students lack robust mental models on which to build explanations for such abstract, unseen causes; in this case, they have only a vague view of the underlying particulate nature of matter.

The Concord Consortium developed the Molecular Workbench (MW) software engine, and hundreds of simulations built with it, to help students visualize the interactions of atoms and molecules. We have found that students from kindergarten through college can learn the patterns of motion in the unobservable world of particles by experimenting with MW simulations, and that they can then explain the causes of phenomena such as heat transfer or the gas laws with mechanistic arguments. The GRASP project is now examining more closely how students learn with simulations and how gestures can enhance that learning.

Research

Research on learning with body motion, or embodied cognition, is led by Robb Lindgren, Principal Investigator, David E. Brown, Co-Principal Investigator, and their graduate students at the University of Illinois at Urbana-Champaign (UIUC). They have constructed a framework for investigating in detail how students use body motion as they learn, based on one-on-one interviews. The researcher’s role is to ask questions that uncover the student’s initial understanding of the topic, and to provide physical and computer models, relevant facts, and scaffolding questions that orient the student and build understanding, while repeatedly asking for refined explanations. Interviews may last up to 40 minutes, and the questions vary depending on the student’s understanding.

Students often use hand motions to help explain their ideas, and interviewers encourage them, with the goal of discovering which gestures students naturally find helpful. If students are reluctant to gesture, the interviewer prompts them to try motions that evoke a mechanism or process in the phenomenon, to see whether doing so helps build their understanding. For example, to demonstrate how molecules create pressure on the plunger of a piston, a student might tap their fingers or fist against an open palm, tapping quickly for high pressure and more slowly for low pressure.

The interviews provide a rich view into student thinking and have yielded new insights into how gestures can enhance students’ ability to explain difficult phenomena. Our research has found that gesturing plays a variety of roles in explanation. Some students develop gestures spontaneously in the course of an explanation, sometimes using gesture as a tool for thinking through physical actions and even watching their hands while describing the motion. For other students, gestures seem to develop subconsciously, their hands constructing tentative representations of the ideas they are formulating. In the interviews, researchers have tried to make students aware of their hand use and to have them develop it further. Analysis of the interviews shows that acknowledging students’ gestures and having them reflect on and refine those gestures improves their explanations. Bringing gesture to a conscious level appears to be a useful scaffolding tool, which suggests a pedagogical opportunity when it is combined with new technologies.

Gesture input technologies

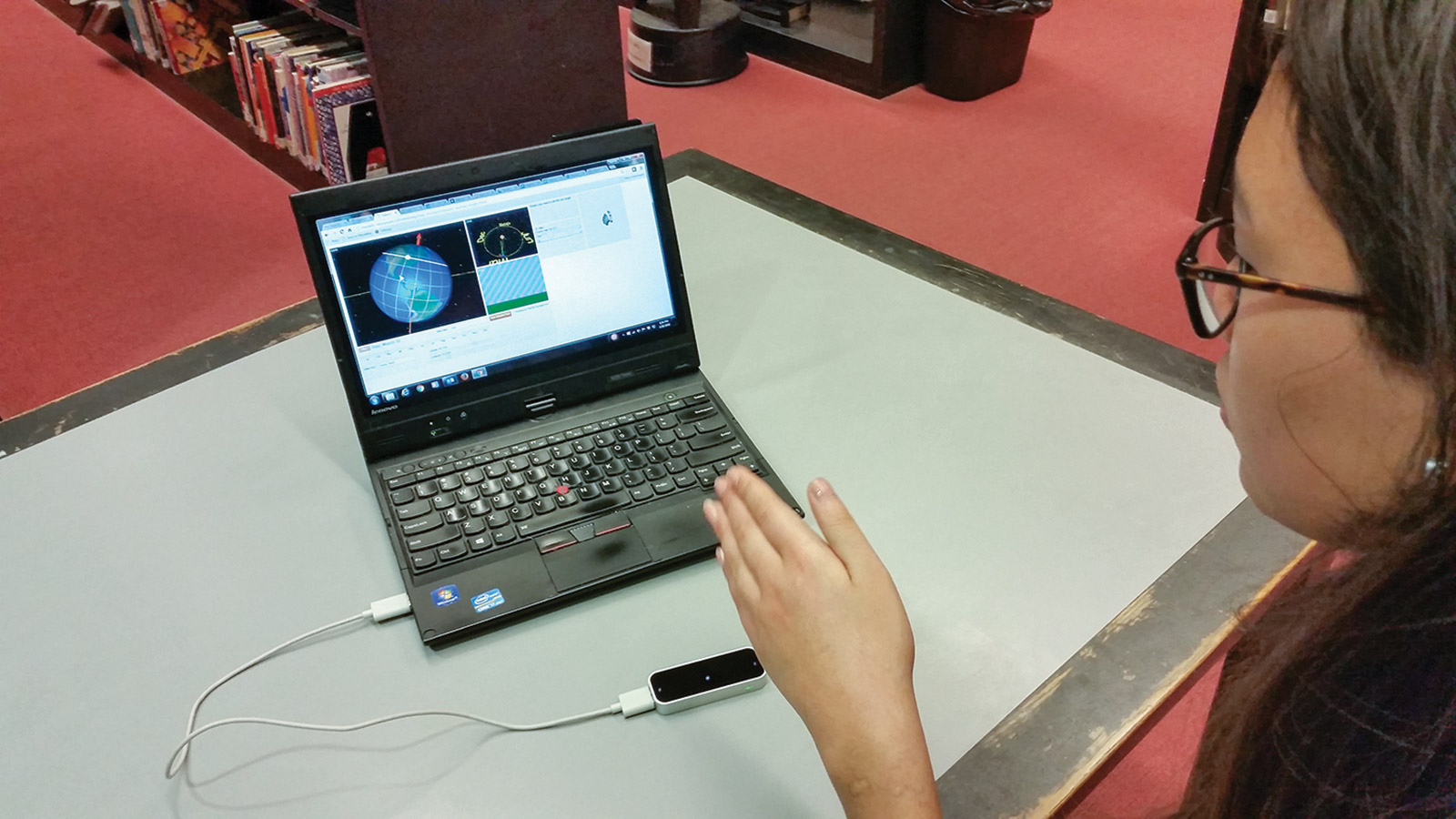

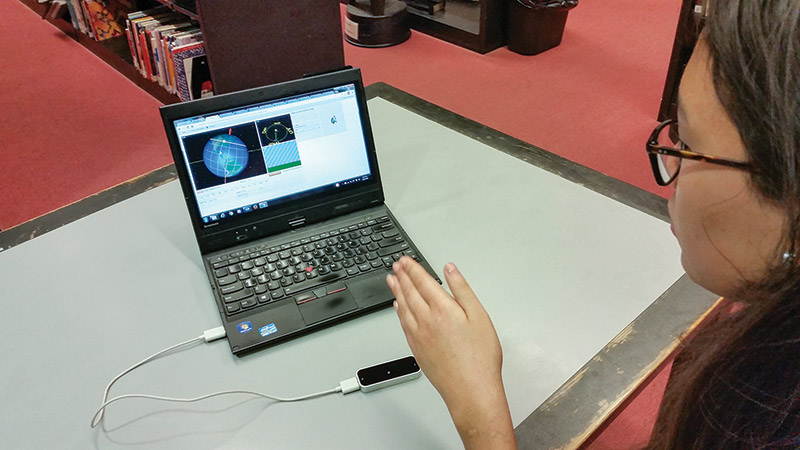

The UIUC GRASP team and the Concord Consortium are currently exploring how to combine the learning potential of computer simulations with the scaffolding benefit of gestures, using new input technologies that let students control simulations with body motion. Gesture input devices have been around for quite a while; Microsoft Kinect, popular with gamers, is perhaps the best-known example. More recently, simpler and less expensive technologies have emerged. Our initial work uses the $80 Leap Motion controller because of its low cost, small size, ease of installation, cross-platform (PC and Mac) flexibility, and compatibility with our browser-based online simulations. Gesture control is not used to operate on-screen “widgets” (buttons, sliders, or checkboxes) but to manipulate the central actions and elements of the phenomena the simulations represent. Gesture input should allow students to feel and participate in the phenomena.

The focus of our work at the Concord Consortium is to apply what we have learned from the interviews to design and build gesture-controlled simulations that also take into account the capabilities of the Leap Motion controller. Although the Leap is designed to detect hands, it does not reliably detect finger motions in all orientations, and one of the user’s hands may hide the other from the device. Because the technology cannot yet detect every motion we might imagine, we have sometimes modified the most physically meaningful motions in our software designs so that the device can interpret them. We are also designing a user interface that seamlessly shows students how to interact with a simulation while leaving room for their own inquiry and experimentation.

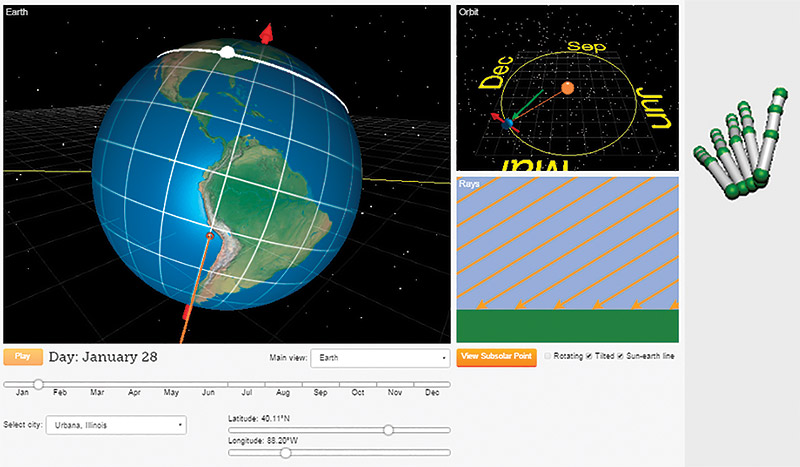

Our three emerging gesture-based simulations (molecular heat transfer in solids, the pressure-volume gas law relationship, and the causes of the seasons) are being tested with students in a similar interview format. The interview protocol still requires students to explain their evolving ideas, but attention now focuses on their interaction with the simulations, to assess how well they can control them and what they notice and learn. These interviews also include “challenges” in which students are asked to use gestures to effect some change in the simulation and to describe what they think their gestures represent and what effect the gestures have on the system.

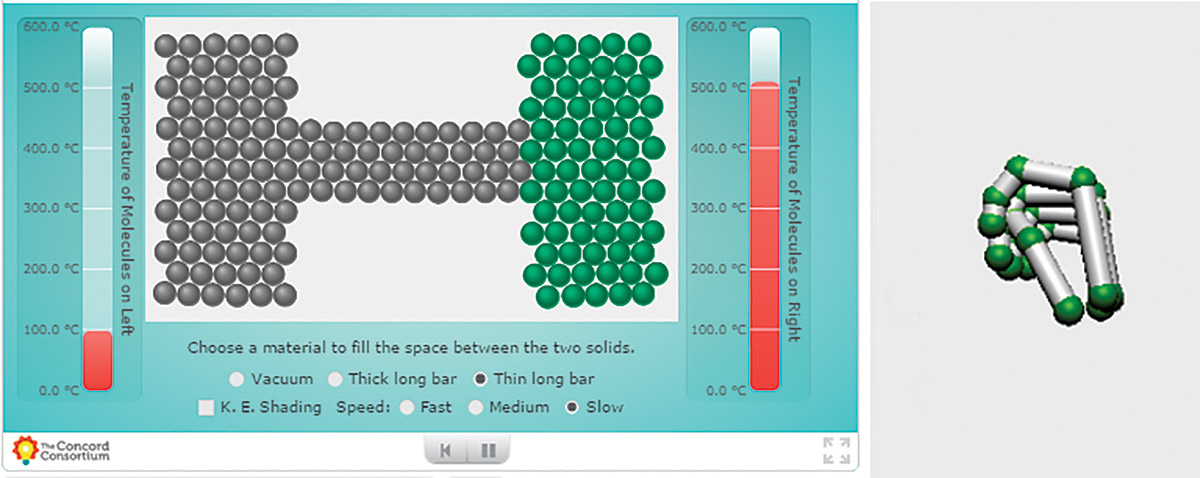

To control the heat transfer simulation (Figure 1), students use their right or left hand to select one of two blocks of molecules that represent solids. By varying how fast they shake their hand, students directly manipulate the vibrational speed of the molecules, thereby changing the block’s temperature. Students can then watch the oscillations pass from molecule to molecule through collisions as the system equilibrates.
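The control loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the GRASP implementation: it assumes the controller delivers palm x-positions at a fixed sampling interval, and the function names and frequency-to-temperature constants are hypothetical. It estimates shaking frequency by counting zero crossings, maps that frequency linearly onto a target temperature, and then applies velocity rescaling, a standard molecular dynamics way of setting a block’s temperature that may differ from what Molecular Workbench actually does. Units are reduced 2D units with mass and Boltzmann’s constant set to 1.

```python
import math
from typing import List, Tuple

Velocity = Tuple[float, float]

# Hypothetical mapping constants (not taken from the GRASP simulations).
MAX_SHAKE_HZ = 5.0        # shaking at or above this rate counts as "hottest"
T_MIN, T_MAX = 0.1, 2.0   # reduced (dimensionless) temperature range


def shake_frequency(xs: List[float], dt: float) -> float:
    """Estimate oscillation frequency (Hz) from evenly spaced palm x-positions.

    Counts sign changes about the mean; each full cycle crosses zero twice.
    """
    if len(xs) < 2:
        return 0.0
    mean = sum(xs) / len(xs)
    centered = [x - mean for x in xs]
    crossings = sum(1 for a, b in zip(centered, centered[1:]) if (a < 0) != (b < 0))
    duration = dt * (len(xs) - 1)
    return crossings / (2.0 * duration)


def target_temperature(freq_hz: float) -> float:
    """Linearly map shake frequency to a temperature, clamped to [T_MIN, T_MAX]."""
    frac = min(max(freq_hz / MAX_SHAKE_HZ, 0.0), 1.0)
    return T_MIN + frac * (T_MAX - T_MIN)


def kinetic_temperature(vels: List[Velocity]) -> float:
    """2D kinetic temperature with m = k_B = 1: mean kinetic energy per particle."""
    return sum(0.5 * (vx * vx + vy * vy) for vx, vy in vels) / len(vels)


def rescale_to(vels: List[Velocity], t_target: float) -> List[Velocity]:
    """Velocity rescaling: scale all velocities so the block reaches t_target."""
    t_now = kinetic_temperature(vels)
    if t_now == 0.0:
        return list(vels)  # a motionless block has no direction to scale
    s = math.sqrt(t_target / t_now)
    return [(vx * s, vy * s) for vx, vy in vels]


if __name__ == "__main__":
    dt = 0.01  # 100 samples per second
    # Simulated palm swinging at 2 Hz for one second.
    xs = [math.sin(2 * math.pi * 2.0 * i * dt + 0.3) for i in range(101)]
    f = shake_frequency(xs, dt)
    block = [(1.0, 0.0), (0.0, 2.0), (-1.0, -1.0)]
    block = rescale_to(block, target_temperature(f))
    print(f"{f:.2f} Hz -> T = {kinetic_temperature(block):.2f}")
```

Zero-crossing counting is cheap enough to run on every frame, but it is sensitive to jitter, so a real position stream would likely need smoothing first. Rescaling only sets the temperature of the selected block; the spread of energy to the other block would come from the simulation’s own collision dynamics.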

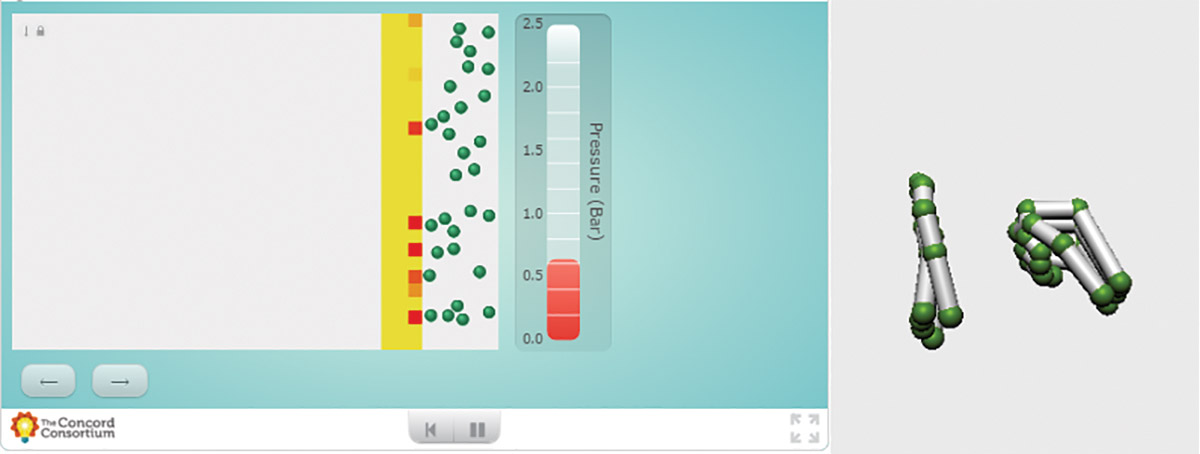

For the gas laws simulation (Figure 2), the central learning objective is the cause of pressure: gas molecules in an enclosed vessel strike its surfaces, and pressure increases as they hit harder and more frequently. In some of our early interviews, students represented pressure by striking one palm with the fingers or fist of the other hand to stand for molecules, as described above. We are now testing this gesture as a control in the simulation, so that increasing the pressure decreases the volume enclosing the gas. This is a kind of reverse causality: we generally think of decreasing the volume of a gas to increase its pressure. But our approach highlights the mechanism of pressure as the rate at which molecules beat against the walls.

Our seasons simulation (Figure 3a) uses a similar reverse causality to focus on the angle at which the Sun’s rays strike Earth’s surface as the major cause of the seasons. Here, the angle of a tipped hand represents the angle of the sunlight, which in turn controls Earth’s position in its orbit (Figure 3b).
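The reverse-causality mappings in the gas laws and seasons simulations can be sketched as follows. This is a hypothetical Python illustration, not the project’s code: the calibration constants and function names are invented, and pressure is assumed proportional to the tapping rate, which holds only at constant temperature, where each molecular hit transfers the same average momentum. Volume then follows from Boyle’s law, PV = constant. For the seasons, the energy delivered per unit of ground area scales with the sine of the Sun’s elevation angle, and the noon elevation is 90° minus the absolute difference between latitude and the solar declination (about ±23.44° at the solstices).

```python
import math

# Hypothetical calibration constants (not taken from the GRASP simulations).
P_REF = 1.0             # pressure at the reference tapping rate (arbitrary units)
V_REF = 1.0             # gas volume at P_REF (arbitrary units)
TAP_HZ_REF = 2.0        # reference tapping rate, taps per second
AXIAL_TILT_DEG = 23.44  # Earth's axial tilt; solstice declination is +/- this


def pressure_from_taps(tap_hz: float) -> float:
    """At constant temperature, pressure is proportional to the wall-hit rate."""
    return P_REF * tap_hz / TAP_HZ_REF


def volume_from_pressure(p: float) -> float:
    """Boyle's law (fixed temperature and amount of gas): P * V = P_REF * V_REF."""
    if p <= 0.0:
        raise ValueError("pressure must be positive")
    return P_REF * V_REF / p


def noon_elevation_deg(latitude_deg: float, declination_deg: float) -> float:
    """Sun's elevation above the horizon at local solar noon, in degrees."""
    return 90.0 - abs(latitude_deg - declination_deg)


def relative_insolation(elevation_deg: float) -> float:
    """Energy per unit ground area relative to an overhead Sun: sin(elevation)."""
    return max(0.0, math.sin(math.radians(elevation_deg)))


if __name__ == "__main__":
    # Tapping twice as fast doubles the pressure and halves the volume.
    for taps in (2.0, 4.0):
        p = pressure_from_taps(taps)
        print(f"{taps} taps/s -> P = {p:.2f}, V = {volume_from_pressure(p):.2f}")

    # Noon sunlight at 40 degrees N, June vs. December solstice.
    summer = relative_insolation(noon_elevation_deg(40.0, AXIAL_TILT_DEG))
    winter = relative_insolation(noon_elevation_deg(40.0, -AXIAL_TILT_DEG))
    print(f"summer/winter noon intensity ratio: {summer / winter:.2f}")
```

At 40° N, this gives a June noon intensity a little more than twice the December value, which is the angle effect the tipped-hand gesture is meant to make tangible.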

Our goal is to test the effectiveness of these and other gesture-based approaches to interacting with simulations of scientific phenomena. We hope to gain new insights for the field of embodied cognition and to apply them directly to new learning environments.

Nathan Kimball (nkimball@concord.org) directs the GRASP project at the Concord Consortium.

This material is based upon work supported by the National Science Foundation under grant DUE-1432424. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.