Practice SPARKS Perfection in Students

Formative assessments in electronics transform novices into experts.

Students working toward a technical degree need to master certain routine skills before they can manipulate more complex systems. In electronics, for example, the ability to read a resistor color code and to use a multimeter must become second nature. Unfortunately, instructors do not have time to stand behind every student, mentoring them during the many hours that it takes for novices to become experts at operating lab equipment.

“It’s really cool! It tells you exactly what you did wrong.”

The SPARKS project in electronics not only logs student errors, but also provides students with useful feedback on how to improve their performance and hone their skills.

SPARKS, which stands for Simulations for Performance Assessments that Report on Knowledge and Skills, builds upon the successful logging functionality of our recently completed Computer-Assisted Performance Assessment (CAPA) project. Supported by the Advanced Technological Education Program at the National Science Foundation, and working closely with research partners at CORD in Waco, TX, and Tidewater Community College in Virginia Beach, VA, SPARKS is creating interactive assessments that monitor student performance and offer insightful critiques and helpful hints.

In pilot tests we have found that this kind of scaffolded practice appeals to motivated students. Our personalized guidance can help these students build proficiency.

While CAPA focused on summative assessment for certification in electronics, SPARKS, which covers 14 topics in the basic electronics curriculum, is creating formative assessments that enable students to practice their skills and improve their performance in a safe, supportive environment. The assessments are aligned with a college-level introductory course in electronics and constitute a sequence of realistic tasks of increasing complexity. They run in a web browser and require no additional software.

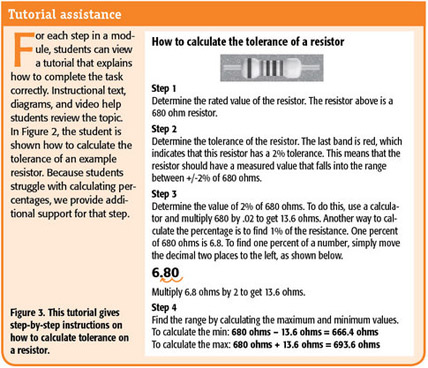

In the first module, Measuring Resistance, students are presented with a random resistor and asked to complete three tasks. First, they must interpret the color bands to provide the rated resistance and tolerance of the resistor. Next, they are asked to measure the resistance directly, using a digital multimeter. Finally, they must calculate the tolerance range and decide whether the resistor is within tolerance. These tasks are relatively complex and there are many ways in which beginning students can go astray. Accordingly, we have developed a comprehensive rubric for scoring the students’ performance and giving them the detailed feedback they need to improve. Students are encouraged to run the assessment multiple times. They can see their progress because the computer keeps track of their scores.
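The arithmetic behind the three tasks can be sketched in a few lines of Python. This is only an illustration, not the SPARKS code: it assumes a four-band resistor and the standard color code, and the function names are invented for the example.

```python
# Illustrative sketch only: assumes a four-band resistor and the
# standard color code. All names here are hypothetical.
DIGITS = {
    "black": 0, "brown": 1, "red": 2, "orange": 3, "yellow": 4,
    "green": 5, "blue": 6, "violet": 7, "gray": 8, "white": 9,
}
# The third band is a power-of-ten multiplier; gold and silver scale down.
MULTIPLIERS = {color: 10 ** digit for color, digit in DIGITS.items()}
MULTIPLIERS.update({"gold": 0.1, "silver": 0.01})
# The fourth band gives the tolerance, stored here in percent.
TOLERANCES = {"brown": 1, "red": 2, "gold": 5, "silver": 10}


def decode_four_band(band1, band2, multiplier, tolerance):
    """Task 1: return (rated resistance in ohms, tolerance in percent)."""
    rated = (DIGITS[band1] * 10 + DIGITS[band2]) * MULTIPLIERS[multiplier]
    return rated, TOLERANCES[tolerance]


def tolerance_range(rated, tolerance_pct):
    """Task 3a: return the (low, high) limits of acceptable resistance."""
    low = rated * (100 - tolerance_pct) / 100
    high = rated * (100 + tolerance_pct) / 100
    return low, high


def within_tolerance(measured, rated, tolerance_pct):
    """Task 3b: is the multimeter reading inside the tolerance range?"""
    low, high = tolerance_range(rated, tolerance_pct)
    return low <= measured <= high


# Yellow, violet, red, gold is a 4700-ohm resistor rated at 5 percent.
rated, tol = decode_four_band("yellow", "violet", "red", "gold")
print(rated, tol, tolerance_range(rated, tol))
```

The second task, the multimeter measurement, supplies the `measured` value: a reading of 4620 ohms falls inside the range for this resistor, while a reading of 5000 ohms does not.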

Reporting rubrics

After students complete the steps in an assessment, they receive a report that explains exactly what they did, including the proverbial good, bad, and ugly. Did the student get a correct value (good), but with an incorrect unit (bad)? Did they cause a virtual fire (ugly)?
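As a rough illustration of the "good value, bad unit" case, a report could compare the number and the unit separately. The sketch below is an assumption about how such a check might look, not the project's actual logic; the unit table and the function name are hypothetical.

```python
import math

# Hypothetical unit table for resistance answers.
UNIT_FACTORS = {"Ω": 1, "kΩ": 1_000, "MΩ": 1_000_000}


def check_answer(value, unit, expected_ohms):
    """Classify an answer as 'good', 'bad unit', or 'wrong'."""
    def close(ohms):
        return math.isclose(ohms, expected_ohms, rel_tol=1e-9)

    # Correct number with the correct unit.
    if unit in UNIT_FACTORS and close(value * UNIT_FACTORS[unit]):
        return "good"
    # The number would be correct under a different unit.
    if any(close(value * factor) for factor in UNIT_FACTORS.values()):
        return "bad unit"
    return "wrong"


print(check_answer(4.7, "kΩ", 4700))  # correct value and unit
print(check_answer(4.7, "Ω", 4700))   # correct value, incorrect unit
```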

Students not only see the correct answers, but also suggestions on how to improve the process for using the tools and calculating measurements. In some cases they are directed to specific tutorials aimed at refining deficient skills so they will do better the next time.

SPARKS gives students as many “do-overs” as necessary to master the skills in the module. You might not think students want to hear about the error of their ways, but they do! In our preliminary research, we have observed students working to perfect their scores one step at a time.

We score student performance on 12 different measures. While we care about whether or not they give the correct answers and how long it takes to complete the assessment, our rubric also awards some points for partially correct answers. It takes off points if a student fails to report the right number of significant digits. The reports go beyond merely looking at a student’s answers. We also analyze student actions and point out process errors, such as incorrect or unsafe settings of test equipment. And we take into account the fact that an incorrect answer in an early step of a process may affect a student’s later answers. Our rubric gives partial credit, for example, to the student who gets the rated resistance wrong but then correctly calculates the tolerance range, even though it was based on the initial incorrect value.
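The partial-credit idea in that last example can be sketched as follows. The point values, tolerance for rounding, and function name are invented for illustration; the only thing taken from the rubric described above is that a tolerance range calculated correctly from a wrong rated resistance still earns some credit.

```python
import math


def score_tolerance_range(student_rated, student_range, true_rated,
                          tolerance_pct, full_points=10, partial_points=5):
    """Score a tolerance range, giving partial credit for a correct
    calculation that started from an incorrect rated resistance.

    The point values are hypothetical defaults.
    """
    def expected(rated):
        return (rated * (100 - tolerance_pct) / 100,
                rated * (100 + tolerance_pct) / 100)

    def matches(given, target):
        return all(math.isclose(g, t, rel_tol=1e-6)
                   for g, t in zip(given, target))

    # Full credit: the range is right for the actual resistor.
    if matches(student_range, expected(true_rated)):
        return full_points
    # Partial credit: the range is consistent with the student's own
    # (incorrect) rated value, so the calculation itself was sound.
    if matches(student_range, expected(student_rated)):
        return partial_points
    return 0


# A student misreads a 4700-ohm, 5 percent resistor as 470 ohms but
# then calculates the range for 470 ohms correctly.
print(score_tolerance_range(470, (446.5, 493.5), 4700, 5))
```

Checking the later answer against the student's own earlier value, and not only against the true value, is what keeps one early mistake from being penalized twice.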

When “failure” means success

We recently piloted the Measuring Resistance module with students in introductory electronics classes at a high school and a two-year college. Our purpose went beyond identifying software bugs and problems in the user interface (though, thanks to perceptive students, we found both). We gathered student feedback on the overall experience. Did the activity teach students anything? Were they able to improve their scores over multiple trials? Would they want to use SPARKS assessments again?

The answer to all three questions is yes. Yes, students learned; yes, they improved their scores with repeated attempts; and, yes, they would use the assessments again. In fact, one student asked if he could use the software at home to practice on his own—exactly the response we had hoped for!

During pilot software testing at Tidewater Community College, students vied with each other to see who would be the first to get a perfect score. Students ran the activity multiple times in the hope of improving their performance with each new resistor. The table in Figure 4 shows one student’s dramatic improvement over multiple trials with four different resistors.

At Minuteman Career and Technical High School in Lexington, Massachusetts, we observed a class of electronics students as they worked through the resistor measurement module, interacting with them at key points in the process. We gave them the types and depth of feedback they needed in order to understand the mistakes they had made. We also provided hints so they could do better the next time. With the right feedback, students were able to improve their performance in subsequent trials. And their feedback to us has led to significant improvements in the reports. Students not only want to know where they went wrong, but they also have good ideas about what they need to know to get better.

Virtual monitoring and mentoring

Without guidance and support, learning new skills can be a frustrating experience. Designed to be used with or without human mentoring, the SPARKS software guides students by giving them instant responses and comprehensive feedback. Circuits and oscilloscopes cannot tell a student why he got the wrong answer or how to avoid shorting out the power supply. The SPARKS simulations do that and more. Our students, in turn, are showing that given constructive feedback they are willing to practice a procedure over and over. With SPARKS, doing it wrong is just the first step toward learning how to do it right.

Trudi Lord (tlord@concord.org) is the SPARKS project manager.

Paul Horwitz (phorwitz@concord.org) directs the project.