Can They Do It or Do They Just Know How to Do It?

At first glance it seems obvious that simulation-based performance assessments are preferable to traditional question-and-answer tests as a way of assessing students’ understanding, particularly in technical areas. Unlike more static items, simulations provide opportunities to observe student behavior and reasoning in cognitively rich contexts that mirror the complexity of the real world. Using simulations, one can assess more than memorization and superficial test-taking skills by confronting students with incompletely defined problems that require multiple steps to solve and often allow more than one satisfactory outcome.

But simulation-based challenges of this kind are expensive to create and require extensive, customized research to administer and score effectively. This raises the question of whether they really capture aspects of students’ learning that are inaccessible to a traditional assessment. It is conceivable, after all, that a student’s answers to a suite of cleverly designed questions might act as reliable markers, accurately reflecting the knowledge, understanding, and skills we wish to measure in that student. How likely this is may depend on the particular content we wish to assess. Take, for example, the following scenario:

Imagine that you’re learning how to cook a soufflé. The instructor shows you how to make the sauce, how to separate the egg yolks from the whites, how to get the timing just right. You take careful notes. You remind yourself never to open the oven door while the soufflé is baking. You dutifully underline the importance of preheating the oven and placing the soufflé in its exact center. The time comes for the final exam. You reread your notes and memorize various recipes. The written exam consists of a single question: “In your own words, explain how to cook a perfect soufflé.” You’re well prepared, and you receive an “A” on this test. But the next day you take the practical examination, in which you are challenged to make a soufflé, something you had never done in class. Even though on paper you appeared to know every detail of how to make one, your soufflé fails to rise and comes out a gooey mess. As an excellent student who has worked hard in the course, you are as surprised as anyone at your dismal performance. How could you ace the written test and do so badly on the real one?

Does this make-believe story sound unlikely to you, or does it seem self-evident that “book knowledge” alone cannot make one a good cook? And what would you say about a good electronics technician? Is there a difference between the ability to troubleshoot a circuit and the ability to correctly answer questions about troubleshooting a circuit?

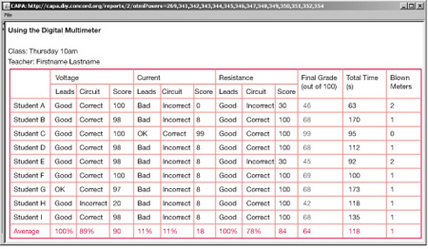

In the Computer-Assisted Performance Assessment (CAPA) project, funded by the National Science Foundation, we have been examining just that question. We have created realistic computer simulations of electronic circuits and test equipment; with these simulations we have developed interactive assessments that challenge students to make measurements and troubleshoot circuits. As the students work on these tasks, the computer keeps track of everything they do, and when they’re finished, it reports on their performance. The reports, which are intended both for the student and for the instructor, not only indicate whether or not a student was able to accomplish the task, but also contain information on how she went about it. If the student did something wrong, the computer will point it out; if she left out a critical step, that too will be observed and reported.
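The kind of report described above, which flags both wrong actions and omitted steps, can be sketched in a few lines. This is a minimal illustration under loudly stated assumptions: the step names, the `ActionLog` class, and the `report` function are all hypothetical and do not reflect how the CAPA software is actually built. The sketch only shows one way a logged sequence of student actions could be compared against a list of required steps and known mistakes.

```python
from dataclasses import dataclass, field

# HYPOTHETICAL step names for a "measure the current" task; illustrative
# assumptions only, not the CAPA project's actual data model.
REQUIRED_STEPS = [
    "open_switch",             # de-energize the circuit before rewiring it
    "select_current_range",    # put the meter on a current (ammeter) setting
    "insert_meter_in_series",  # break the circuit and connect the meter into the gap
    "close_switch",            # re-energize the circuit and read the meter
]

# HYPOTHETICAL actions that always count as mistakes, mapped to feedback text.
MISTAKES = {
    "meter_across_source": "Meter on a current range was placed across the source (fuse would blow).",
}


@dataclass
class ActionLog:
    """Keeps every action a student takes in the simulated lab, in order."""
    actions: list[str] = field(default_factory=list)

    def record(self, action: str) -> None:
        self.actions.append(action)


def report(log: ActionLog) -> dict:
    """Summarize whether the task was accomplished and how the student went about it."""
    # Required steps that never appear anywhere in the log.
    missing = [step for step in REQUIRED_STEPS if step not in log.actions]
    # Feedback for every known mistake that appears in the log.
    mistakes = [msg for action, msg in MISTAKES.items() if action in log.actions]
    return {
        "accomplished": not missing and not mistakes,
        "missing_steps": missing,
        "mistakes": mistakes,
        "sequence": list(log.actions),  # the full record of what the student did
    }


# Usage: a student who skips steps and puts the meter across the source.
log = ActionLog()
for action in ["select_current_range", "meter_across_source", "close_switch"]:
    log.record(action)
result = report(log)
print(result["accomplished"])   # False
print(result["missing_steps"])  # ['open_switch', 'insert_meter_in_series']
```

A realistic simulation would also have to check the order of the steps and the actual state of the simulated circuit, not merely whether an action appears in the log; this sketch deliberately leaves those checks out.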

In addition, for each of our simulation-based performance assessments we gave students a multiple-choice test that asked how they would perform the task. We have repeated this experiment with three different groups of students from high schools, two-year colleges, and four-year colleges. In each case the students performed better on the question-and-answer test than on the corresponding performance assessment. In other words, just as our fictional culinary arts student could recall the instructions for making a soufflé but couldn’t make one, the electronics students in our study were significantly more successful at answering questions about how to do something than at actually doing it.

This finding, which we continue to replicate and study, poses a potentially serious problem for training the nation’s technical workforce. The vast majority of technicians in electronics and other fields are tested using paper-and-pencil tests, often multiple-choice ones, presumably because they are so easy to score. On the basis of such tests, both large and small companies hire “certified” men and women, only to discover that they need considerable further training to master the real-world skills required for their jobs. The resulting expense is a drain on the resources of such firms and has a negative impact on the nation’s ability to compete in a global economy.

What’s going on?

Students take our assessments at a point in the introductory electronics course where both they and their instructors agree that the challenges we pose are ones they should have already mastered. And, as we have seen, they are generally able to answer questions about such tasks better than they can actually perform them. But why?

The answer might lie in the greater directedness of a question-and-answer test compared to a corresponding performance assessment, which tends to be more open-ended. As we developed our multiple-choice tests, we were forced to ask relatively fine-grained questions. For instance, in order to assess whether students knew how to measure the current in a circuit, we posed separate questions about where to place the multimeter leads, whether a switch should be open or closed, and what setting to put the meter on; the corresponding performance assessment simply provided students with a simulated multimeter and watched to see what they did with it. Such an open-ended task places greater demands on executive function, the ability to plan and sequence steps without prompting, which might explain why students did relatively poorly on it.

But new data suggest that the difference in task granularity does not account for the students’ different performances in the two testing modalities. In a recent experiment we taught 13 students in an introductory electronics course how to measure the current in a circuit. The instruction consisted of a lecture and a demonstration using an actual circuit and meter, and it made a point of warning students always to insert the meter directly into the circuit (that is, in series with it), so as not to draw too much current and blow out the fuse. A few days later the class was asked to write a short essay describing how to measure current. Like the performance assessment, and unlike our multiple-choice tests, an essay question offers no fine-grained prompts: the student must decide which steps matter and in what order. Ten students answered the question correctly. Later the same day, after a short break for lunch, the class reconvened and the students were given a real circuit and asked to measure the current. This time only three of the students completed the task correctly; the other 10 blew out the fuse!
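To see why a misplaced meter blows its fuse, consider a rough Ohm’s-law calculation with hypothetical values chosen purely for illustration (none of them come from the study): a 9 V supply, a 1 kΩ load resistor, and a meter whose internal resistance on its current range is about 0.1 Ω. Inserted in series with the load, the meter carries only the circuit’s normal current:

$$I_{\text{series}} = \frac{V}{R_{\text{load}} + R_{\text{meter}}} = \frac{9\ \text{V}}{1000\ \Omega + 0.1\ \Omega} \approx 9\ \text{mA}$$

Placed directly across the supply instead, the low-resistance meter acts as a near short circuit:

$$I_{\text{across}} = \frac{V}{R_{\text{meter}}} = \frac{9\ \text{V}}{0.1\ \Omega} = 90\ \text{A}$$

In this idealized sketch, which ignores the supply’s own internal resistance, the misplaced meter would pass roughly ten thousand times the series current, far more than its fuse is designed to carry.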

Are we really leaving children behind? How can we tell?

The No Child Left Behind (NCLB) Act attempts to measure the achievement of schools, teachers, and students by asking questions and evaluating answers. This seems like a reasonable approach, but, at least in the field of electronics, the CAPA project is casting doubt on its validity by demonstrating that students’ ability to answer questions may not be a reliable indicator of their relevant knowledge, skills, or understanding. This finding is not entirely unexpected; NCLB has been criticized, in fact, precisely because it encourages educators to “teach to the test,” the implication being that the skills required to succeed on question-and-answer assessments are not necessarily those that are important outside of school. The ultimate significance of performance assessments may lie in the fact that they are hard to “fool” by the application of narrow test-taking techniques irrelevant to the learning we are trying to assess. The introduction of such a realistic assessment methodology would make it possible to embrace accountability without trivializing educational goals.